Tweets on the Dark Side of the Web

Humans and robots in the industry of political propaganda

Research: Kostas Zafeiropoulos, Elvira Krithari, Janine Louloudi, Nikos Morfonios, Maria Sidiropoulou, Ilias Stathatos.

This research was conducted in collaboration with Dimitris Papaevagelou, software engineer and co-founder of MediaWatch.io, Civic Information Office.

Date of publication: May 20, 2019.

Fake news, the uncomfortable truth

Fake news, whether blatant lies or distorted events taken out of the context in which they occurred, was not born in the 21st century, nor even in the 20th; neither was propaganda itself. Nor are social media to blame for its existence. Long before sensationalist tabloids, TV talk shows and fake news on social media, there was William Randolph Hearst (1863-1951): the first press tycoon, a manufacturer of news and scaremongering headlines, a champion of “real life” stories built on the holy trinity of sex, crime and scandal.

The man who inspired “Citizen Kane” purportedly believed that “news is what somebody does not want you to print. All the rest is advertising”. Hearst’s wire to Frederic Remington, the artist he had sent to Havana and who had asked to come home from a quiet, uneventful Cuba, has become legendary: “Please remain. You furnish the pictures and I’ll furnish the war”. And the (Spanish-American) war was indeed furnished.

Nor is the concept of propaganda a recent invention. Mass information and propaganda are historically determined social phenomena, intertwined with capitalism. Our talent for gossip is not new either, and it is not necessarily a bad thing: it is what allows us to build networks in societies too large to sustain personal relations among all their members. The “imaginary realities” that we form and accept play a crucial role in the evolution of our societies.

But the last 10-15 years have seen enormous changes: an ever-faster pace of news dissemination, a massive volume of digital information, growing mistrust of traditional media, worsening working conditions for journalists and a spectacular rise of information on the Internet. According to a scientific report by the Joint Research Centre of the European Commission (April 2018), two out of three Internet users prefer to access news through algorithm-driven platforms, such as search engines, news aggregators and especially social networks. Social networks enjoy a rapidly growing share in opinion making, especially among younger people, the economically active population and residents of urban centers.

Political campaigns have long made dominance of the digital sphere a central strategy. The web offers political marketing a huge advantage: bigger and faster impact at lower cost. In this sphere, automation without constant human supervision allows a relatively small number of individuals to spread all kinds of messages to a genuinely mass audience at great speed. And there is no better strategy than to combine automated processes with the accounts of real people who have already built up large followings.

“How much of the Internet is fake?” was the question asked by “New York Magazine” on December 26, 2018, referring to studies according to which less than 60% of total web traffic is human.

Are you certain that what you read every day on your social media pages is real? This question was the starting point for the MIIR team’s attempt at the first in-depth, data-based journalistic investigation of fake and automated accounts on Twitter in Greece, the battlefield of an obscure war of political propaganda.

Our months-long investigation describes how opinion is shaped on social media, with a focus on Twitter, through the construction of virtual networks aimed at propaganda on behalf of politicians and political parties, as well as at the promotion of commercial TV productions. Based on quantitative and qualitative data, we reveal the illegitimate ways in which Greek political parties, MPs, candidates and influencers, obscure and high-profile alike, rely on automated systems (bots) to promote their positions, overwhelm their political adversaries and, first and foremost, influence those who set the political agenda.

So, are you certain that what you read every day on the web is written by actual persons?

Index

The numbers game

Making noise on the web

A group of leading scholars warned in a recent study that the use of algorithms to disseminate political propaganda is “one of the most powerful new tools against democracy”. Between 2015 and 2017, a team of professors at the Oxford Internet Institute analysed tens of millions of posts on seven social media platforms in nine countries during political crises and elections. The study focused on computational propaganda: the way algorithms, automation and human intervention are used to intentionally spread disinformation on social media.

Message Amplification

“People try to spread disinformation everywhere on the web, using every available platform”, we are told by Ben Nimmo, Senior Fellow for Information Defense at the Digital Forensic Research Lab (DFRLab) of the Atlantic Council, who studies the relationship between social media and digital propaganda campaigns.

One of the main conclusions he has reached after studying the spread of fake news worldwide during major events such as the 2016 US election, the Russian invasion of Ukraine and the recent election in India, a country of over a billion people, is this: the sources of a fake news story may vary, but the key to its successful dissemination is the same everywhere, and it is none other than automation.

“Automation does not create fake stories, but it does work as an amplifier for small groups. On social media in particular, we see that some people are able to multiply a message simply by creating a cluster of fake accounts and adding some form of automation. The goal, in other words, is not to write something that only a few users will see, but to post so much that many people take notice and tell many more that this is a trending subject, something widely talked about. What we have here is a numbers game. If you create enough fake accounts and automate them, there is a chance that they will appear on the list of trending subjects. Social media is therefore the place where, with the right tactics and five people, you can create the impression that five million people are talking about something”, Nimmo explains. He adds that this kind of social media manipulation has been observed as a distinctive modus operandi of fringe political groups, especially on the far right.

Division of Labor

“This helps in agenda-setting – that is, in legitimizing a series of issues in public opinion”, we are told by Nikos Smyrnaios, professor of Political Economy and Sociology of Media and the Internet at the University of Toulouse. Smyrnaios adds: “Television may still be the primary source of information, but the Internet is clearly the medium that sets the trends today.

“There is a kind of informal division of labor among social media. Facebook may be more popular and its users may more accurately represent the general population, but if someone wishes to influence public opinion, as we saw with Trump’s election and with Brexit, their platform of choice will be Twitter, with open or covert campaigns”.

But how is this possible? Worldwide, automation has become synonymous with “bots”. On social media, a bot is an account programmed to operate automatically through a computer algorithm.

Arena of Activity

All of its operations are programmed actions and reactions. “Think of it as a social media account on autopilot”, says Nimmo, adding that the most obvious bots are found on Twitter. “It’s easier to create large numbers of bots on Twitter than on any other social media platform because access to data is much more open there”. Nimmo and Professor Smyrnaios both point to a second advantage of Twitter: it is a kind of “niche market”, where bots can easily find a target group of politicians, journalists and other public figures who can readily pass a message on to a mass audience. “These are the so-called influencers, users who have ways to influence public opinion, giving the impression that something is going on, that there is a ‘buzz’ around an issue”.

Before the 2016 US presidential election, two thirds of all bots on Twitter supported Donald Trump. And yet, contrary to the mainstream narrative, Hillary Clinton’s bots were much more powerful in influencing public opinion than Donald Trump’s (“Even a few bots can shift public opinions in big ways”, The Conversation, 5 November 2018). As a recent study by four researchers from Cornell University showed (“The Impact of Bots on Opinions in Social Networks”, October 2018), although there were twice as many bots supporting Trump as supporting Clinton, Clinton’s bots produced almost twice as large a shift in opinion as Trump’s. However, this was not enough to change the outcome of the election, even in the USA, where over 12% of the population follows the news on Twitter.

Anonymity

Nimmo warns that bots are not easy to detect, but he explains that three distinctive features give them away, the “three As”: Anonymity, (unusual) Activity and (message) Amplification. “A bot can be detected by the number of actions it performs in a day. For example, if an account makes 2,000 posts per day, as I have personally witnessed, this clearly cannot be human behavior. Anonymity, on the other hand, is often betrayed by the account’s name, if it is a simple string of letters or numbers, or by its picture. But the most important feature for detecting a bot is what the account itself does: whether it posts its own tweets or simply reproduces or reacts to other accounts. If all three features are present, then we are certainly dealing with a bot”.
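Nimmo’s “three As” can be turned into a rough screening rule. The sketch below is a minimal illustration, not the method used by DFRLab or by our team: the `AccountStats` fields, the 200-posts-per-day cutoff, the 90% retweet share and the “few letters followed by a long run of digits” handle pattern are all hypothetical choices made for the example.

```python
import re
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
POSTS_PER_DAY_CUTOFF = 200     # Nimmo's example account made 2,000 posts/day
RETWEET_SHARE_CUTOFF = 0.9     # share of activity that merely reproduces others

# A handle made of a few letters plus a long run of digits, e.g. "maria84756321",
# is one crude sign of an auto-generated, anonymous account.
GENERATED_HANDLE = re.compile(r"^[A-Za-z_]{0,8}\d{6,}$")


@dataclass
class AccountStats:
    screen_name: str
    has_profile_photo: bool
    posts_per_day: float
    retweets: int   # posts that only reproduce other accounts
    originals: int  # the account's own tweets


def three_as(acc: AccountStats) -> dict:
    """Return which of the three signals (Anonymity, Activity, Amplification) fire."""
    total = acc.retweets + acc.originals
    return {
        "anonymity": (not acc.has_profile_photo
                      or GENERATED_HANDLE.match(acc.screen_name) is not None),
        "activity": acc.posts_per_day >= POSTS_PER_DAY_CUTOFF,
        "amplification": total > 0 and acc.retweets / total >= RETWEET_SHARE_CUTOFF,
    }


def looks_like_bot(acc: AccountStats) -> bool:
    # Nimmo's rule of thumb: flag only when all three signals are present.
    return all(three_as(acc).values())


suspect = AccountStats("maria84756321", False, 2000, retweets=1980, originals=20)
regular = AccountStats("jane_doe", True, 5, retweets=2, originals=3)
print(looks_like_bot(suspect), looks_like_bot(regular))  # True False
```

Requiring all three signals keeps false positives down: a prolific human who retweets a lot but uses a real name and photo is not flagged.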

As experienced users and various people working in social media management admitted to the MIIR team, creating bots is a fairly simple procedure, although it requires some basic programming skills. The crucial thing, however, is this: to create many new accounts that are realistic enough to look like they are run by a real person, while they are in fact operated automatically.

Credit: The New York Times

Follower counts and account activity, which are important for promoting political or commercial content, can themselves become an area of dubious business activity. This was the subject of “The Follower Factory”, the Pulitzer-nominated investigation by a team of reporters at “The New York Times”, which examined a company called Devumi and exposed the black market in bots.

Lists for Sale

Devumi sold thousands of bots to companies, artists, athletes, political commentators and scholars who wished to boast large numbers of followers on Twitter. The scale of the enterprise was, and still is, huge. To make the accounts look realistic, Devumi “stole” the profiles of real users, many of them minors, to create its automated accounts. The company then programmed these accounts to follow its clients and republish their posts.

Companies such as Devumi usually do not develop their own bots, preferring to buy them or to use the services of “click farms”: facilities, usually in South Asian countries, where underpaid workers sit in front of dozens of computers for hours, clicking on or re-posting the same content.

A similar online market has emerged in Greece too, with websites that sell followers, likes, views and retweets from “real accounts” on any social media platform. Most of these websites guarantee the authenticity of the accounts they sell. However, an employee of one such website, who wished to remain anonymous, assured us that the “authenticity” of these accounts simply means that they are produced by click farms in South Asia.

According to information we collected from professionals in digital marketing and communication, the use of bot accounts to promote products and services is a reality in Greece, especially for new accounts (e.g., of new companies) that aim to rapidly increase their online “visibility”. Several professionals admitted that even politicians, especially during electoral campaigns, have asked them for this kind of service. However, social media management, a service usually provided by such companies, and the creation of bots to increase an account’s appeal are two very different things.

“As a marketing specialist, I have never advised a client to do it. The quality of these accounts is very dubious. It’s better to have a modest but accurate image than an overblown one”, says Spyros Tzortzis, Social Media Manager & Trainer at Sociality, a Cooperative for Digital Information. He explains that the creation and management of bots is usually assigned to programmers working under non-disclosure agreements.

“Ghost” Accounts

The market for bots and automated operations flourished internationally in the 2010s. Today, there are many websites where one can buy the most popular chatbots for corporate social media (bots that reply automatically, with human-like behavior, to questions asked by customers online), and also obtain, with a single click, hundreds or thousands of likes and retweets for one’s social media accounts.

Ben Nimmo also points to another phenomenon identified by the DFRLab team: a black market in bots built on old, inactive accounts of real users. Groups of hackers active on the so-called “dark web” find these accounts, hack them and then either sell them to people looking for bots or reprogram them and use them for their own purposes. “This explains why we often find bots that, for example, have started tweeting at a rate of 300 messages per day over the last couple of days, although their accounts were created in 2012. There are many ‘ghost’ accounts of this kind that are readily exploited by expert hackers”.

For Nimmo, the commercialisation of bots means that the Internet is full of bots and botnets whose purpose and focus shift, for example from politics to commerce or news, according to the wishes of whoever is employing their administrators at the time. “Many bots are clearly commercial. For example, someone may have 10,000 bots and offer a package of 10,000 retweets for 60 dollars. This person doesn’t care at all about the content the bots republish; he’s in it for the money. So there are many bots that are totally apolitical and amoral, and their sole purpose is to make money for their administrator”, Nimmo explains.

He adds that it is easier to rent a botnet than to build one; this is in fact the most widespread practice today, and one he has encountered many times in his research. “When you detect a bot amplifying a particular political hashtag on Twitter, it is important to see what else it amplifies. If it reproduces political messages from a single political party, then it was probably created by a supporter of that party. But it might be a bot that one moment ‘likes’ some political content and the next some high heels in Moscow, or tweets about a summer villa in Mexico or about Norwegian salmon. There is no logical connection between the two. In that case, it is almost certain that we are dealing with a commercial bot, and someone has simply paid it to ‘like’ a particular political post”.
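Nimmo’s test, looking at what else an account amplifies, can be approximated by measuring how concentrated its amplified content is. In this minimal sketch the topic labels (such as `party_A` or `high_heels`) are hypothetical and assumed to have been assigned beforehand, by hand or by some classifier; the 80% cutoff is likewise an arbitrary illustration.

```python
from collections import Counter


def classify_amplifier(topics: list[str], min_share: float = 0.8) -> str:
    """Label an account by how concentrated the content it amplifies is.

    topics: one (hypothetical) topic label per post the account liked or retweeted.
    """
    if not topics:
        return "unknown"
    top_label, top_count = Counter(topics).most_common(1)[0]
    if top_count / len(topics) >= min_share:
        # Mostly one cause: consistent with a supporter-run, partisan bot.
        return f"focused:{top_label}"
    # Unrelated topics (politics, shoes, villas, salmon): consistent with a bot for hire.
    return "mixed"


print(classify_amplifier(["party_A"] * 9 + ["weather"]))                 # focused:party_A
print(classify_amplifier(["party_A", "high_heels", "villa", "salmon"]))  # mixed
```

A “focused” result only says the account amplifies one cause; it does not by itself show who runs it.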

Playing “Cat and mouse”

Cyborg bots

Twitter threatens to delete the accounts that break its rules, which are pretty clear:

It is forbidden:

– to create fake and deceptive accounts for spamming, abusive or disruptive behaviour, including attempts to manipulate conversations on Twitter;

– to buy, sell or seize other Twitter usernames;

– to buy and sell interactions among accounts on the platform. In particular, buying followers, retweets and likes usually means that someone is buying bots or hacked accounts.

The outcry over the influence of bots on the outcome of the 2016 US election, and the fear that similar phenomena might occur before other elections, especially the 2019 EU election, led to stricter detection methods and to the immediate deletion of bot accounts on social media. “Because of Twitter’s pressure, using bots is not as easy now as it was before. More and more often we see botnets being deleted within two or three days of their creation”, says Ben Nimmo, describing a game of “cat and mouse” between bot makers and the social media platforms.

“I was stunned by how easy it was to detect certain categories of fake accounts”, we are told by Mark Hansen, professor of Statistics and head of the Columbia University team that last July conducted the research for “The Follower Factory” in collaboration with “The New York Times”. Even so, he believes that bot “farms” alone are not enough to maximize the appeal of a message, while stressing that there are still holes in the systems social media platforms use to detect malicious bots.

This “persecution” of bots by Twitter led to the development of so-called “cyborg bots”: half-human, half-machine hybrids, that is, automated operations sometimes supported by humans and designed to incorporate elements of a normal user’s behavior, thus bypassing control filters. Such accounts show all the signs of the heightened activity of a programmed bot for a while; then their activity drops significantly and adapts, mimicking that of an average human. “It is as if the algorithm is put on autopilot for a while, then the autopilot is switched off, and then maybe switched on again”, says Nimmo. “During the recent US election, I was watching a Twitter account that made 1,500 posts per day, all of them retweets. This was clearly a bot. After the election, it went back to 2-3 posts per day, which moreover looked original, as if written by a real user. A few months later, it started tweeting on a massive scale again. This kind of activity does not allow the platform to determine with certainty whether it is generated by a bot, so it’s not easy to go ahead and delete the account”.
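The on-off pattern Nimmo describes can be spotted in an account’s daily post counts. The sketch below collapses a daily series into runs of “burst” and “quiet” days and flags a burst, quiet, burst sequence. The 500-posts-per-day cutoff and the function names are hypothetical, and the platforms themselves rely on far richer signals.

```python
def phases(daily_posts: list[int], burst: int = 500) -> list[tuple[str, int]]:
    """Collapse a daily post-count series into runs of ('burst' | 'quiet', n_days)."""
    runs: list[tuple[str, int]] = []
    for count in daily_posts:
        state = "burst" if count >= burst else "quiet"
        if runs and runs[-1][0] == state:
            runs[-1] = (state, runs[-1][1] + 1)   # extend the current run
        else:
            runs.append((state, 1))               # start a new run
    return runs


def looks_like_cyborg(daily_posts: list[int], burst: int = 500) -> bool:
    """True if the 'autopilot' switches on, off and on again."""
    states = [state for state, _ in phases(daily_posts, burst)]
    return any(states[i:i + 3] == ["burst", "quiet", "burst"]
               for i in range(len(states) - 2))


# Loosely modelled on Nimmo's example: 1,500 posts/day, then 2-3/day, then mass tweeting again.
history = [1500] * 10 + [2, 3] * 45 + [1400] * 5
print(phases(history))             # [('burst', 10), ('quiet', 90), ('burst', 5)]
print(looks_like_cyborg(history))  # True
```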

Yet all this underground activity does not take place solely in the distant USA. As we found out during our research, it occurs on a large scale in Greece as well, involving well-known politicians, large political parties and celebrities.

– 4 investigative journalists

– 2 fact-checkers

– 1 software engineer – developer

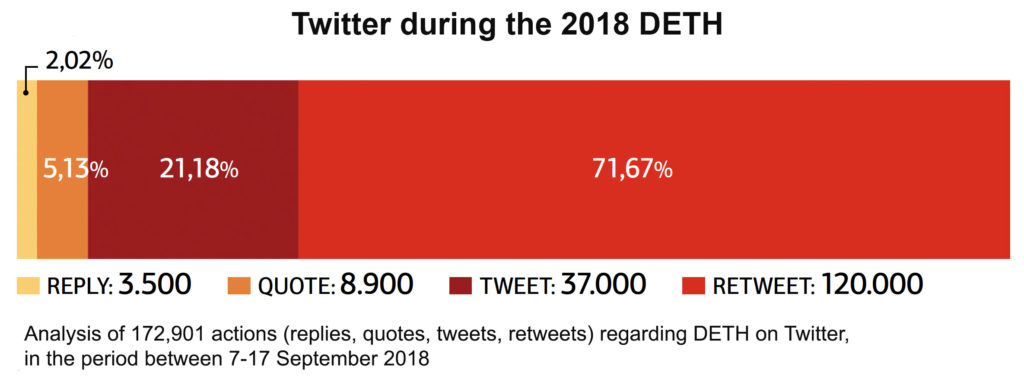

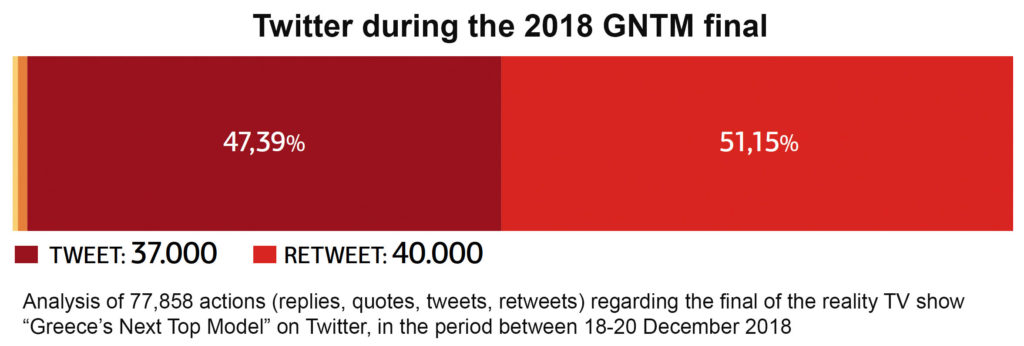

– 2 events studied: the 2018 Thessaloniki International Fair (Diethnis Ekthesi Thessalonikis – DETH) and the 2018 final of the Reality TV show “Greece’s Next Top Model” (GNTM)

– 28.419 unique accounts were drawn (20.178 from DETH and 8.241 from GNTM)

– 3.698 twitter accounts analysed

– 250.759 unique actions (tweets, retweets, replies, quotes) were examined

– 350 hours of evaluating accounts

– 20 interviews

– 5 months of research

– 1 algorithmic visual graph depicting the networks of all the accounts

Our team examined only Twitter, as it is the most accessible and most politicized social medium. Moreover, using the Twitter Streaming API (https://developer.twitter.com/), one can gather a massive amount of data, in full compliance with the existing rules, and study social and political issues.

We chose as a case study one of the most important pre-scheduled Greek political events of 2018, which we knew beforehand would generate an intense political controversy: the Thessaloniki International Fair in September 2018.

Among other things, this fair was marked by heated debate about the Prespa Agreement of June 2018. Using software that we developed (mostly in the Python and Go programming languages; see twitterfarm, free, open-source software, https://github.com/andefined/twitterfarm), we collected all the tweets, retweets, replies, quotes and mentions from 7 September (before the prime minister’s speech at the annual exhibition) to 17 September 2018 (after the opposition leader’s speech), using the following keywords: #ΔΕΘ, #ΔΕΘ2018, #DETH, #Μακεδονία, #Macedonia, #Συμφωνια_Πρεσπων [Prespa_Agreement].

We collected about 173,000 separate actions from roughly 20,000 different accounts. In the process, we relied solely on Twitter’s official API, using data and tools accessible to everyone. We repeated the same procedure during the days of the final of the reality TV show Greece’s Next Top Model (18-20 December 2018), collecting about 8,200 accounts and 78,000 actions.
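The collection step amounts to keeping only the actions that fall inside the reference window and carry one of the chosen hashtags. The sketch below is not taken from twitterfarm and does not call the Twitter API; it assumes the actions have already been fetched as hypothetical dictionaries with `created_at` (a naive datetime) and `text` fields, and it normalizes case and Greek accents so that, for example, #Μακεδονια and #Μακεδονία both match.

```python
import re
import unicodedata
from datetime import datetime


def normalize(tag: str) -> str:
    """Case-fold and strip accents, so '#Μακεδονία' and '#μακεδονια' compare equal."""
    decomposed = unicodedata.normalize("NFD", tag.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


KEYWORDS = {"#ΔΕΘ", "#ΔΕΘ2018", "#DETH", "#Μακεδονία", "#Macedonia", "#Συμφωνια_Πρεσπων"}
KEYS = {normalize(k) for k in KEYWORDS}
START = datetime(2018, 9, 7)
END = datetime(2018, 9, 18)      # exclusive bound, so all of 17 September is kept
HASHTAG = re.compile(r"#\w+")    # \w matches Greek letters in Python 3 str patterns


def in_corpus(action: dict) -> bool:
    """Keep an action if it falls in the window and carries at least one keyword."""
    tags = {normalize(t) for t in HASHTAG.findall(action["text"])}
    return START <= action["created_at"] < END and not tags.isdisjoint(KEYS)


sample = [
    {"created_at": datetime(2018, 9, 8, 21, 0), "text": "Live: η ομιλία στη #ΔΕΘ2018"},
    {"created_at": datetime(2018, 9, 12, 9, 30), "text": "#Μακεδονια και #Συμφωνια_Πρεσπων"},
    {"created_at": datetime(2018, 9, 20, 12, 0), "text": "#ΔΕΘ τελος"},
]
print([in_corpus(a) for a in sample])  # [True, True, False]
```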

The Procedure

Next, after classifying the different accounts, we selected a random sample of 3,698 individual accounts from the repository of tweets we had gathered. Four journalists each analyzed roughly 900 accounts, one by one, reading at least 60 tweets from every account. Based on a series of standardized criteria (both qualitative and quantitative), they categorized the accounts by degree of automation and type of user (unknown, active user, normal user, semi-automated, automated), as well as by the user’s estimated political orientation on a linear axis from Far Left to Far Right. Where we were unable to determine the degree of automation or the political orientation of an account from its past activity, we categorized it as “unknown”. At the end of the procedure, we compiled the results in a unified database.
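Drawing such a sample and sharing it among four reviewers can be reproduced with Python’s standard `random` module. This is a minimal sketch under stated assumptions: the account IDs, the fixed seed (used only to make the draw repeatable) and the round-robin split are illustrative choices, not a description of how the team actually divided the work.

```python
import random


def assign_sample(account_ids, sample_size: int, reviewers: list[str], seed: int = 2018):
    """Draw a simple random sample without replacement and split it round-robin."""
    rng = random.Random(seed)                      # fixed seed -> repeatable draw
    sample = rng.sample(list(account_ids), sample_size)
    # Reviewer i gets items i, i + k, i + 2k, ... where k is the number of reviewers.
    return {name: sample[i::len(reviewers)] for i, name in enumerate(reviewers)}


# Hypothetical integer IDs standing in for the 28,419 unique accounts collected.
batches = assign_sample(range(28419), 3698, ["A", "B", "C", "D"])
print({name: len(ids) for name, ids in batches.items()})
# {'A': 925, 'B': 925, 'C': 924, 'D': 924}
```

The round-robin split gives each reviewer 924 or 925 accounts, in line with the “roughly 900 accounts each” above.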

All-purpose Automation

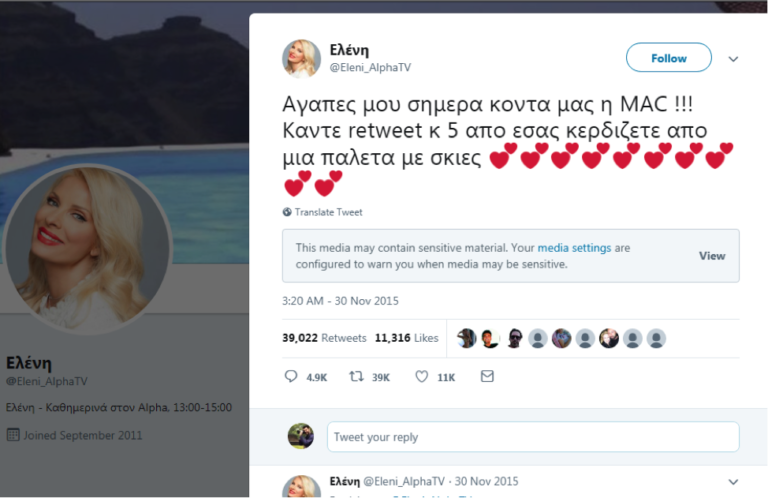

During our research, we contacted many anonymous Twitter users, well-known online figures with different political views, former members of political parties’ communication teams, Greek and international scholars specializing in the subject, and professionals in online marketing. Most of them did not wish to reveal their names, and some even expressed concern that the publication of our research might cause them problems. One of the most experienced professionals in the field told us: “There are people in Greece who approach politicians, MPs or candidates and tell them: ‘Hey, I have 300 different fake accounts on Twitter, they are all trolls. Do you want me to make them talk about you or have them slam your political adversaries?’. These guys often don’t care about ideology. They may go to New Democracy today, to SYRIZA tomorrow and to another party the day after. Their credo is ‘whoever books me first’. And then the same guy who used to sell accounts to politicians may turn to other things, because ‘why not, I already have the accounts and there is no election anywhere near’. And this is when the promotion of TV shows, reality shows, etc. starts”. This was also when we realized that our working hypothesis was beginning to be borne out.

LEADERS IN THE WEB WAR AND THE PROMOTION OF PRODUCTS

WHAT WE DETECTED DURING DETH AND THE GNTM FINAL

One of our first important findings was that many accounts were created shortly before the reference period of our research (7-17 September 2018) and were shut down a few weeks later, either by the users themselves or by Twitter. 40.6% of the accounts we analyzed either had no political orientation or could not be placed on a linear political axis running from the Far Left to the Far Right and the pro-Nazi Golden Dawn. The remaining 59.4% broke down as follows (all shares are of the total sample): 8.2% of the accounts expressed views clearly sympathetic to the communist Left, the extra-parliamentary Left and anarchism; roughly 14.23% expressed views close to the Center-Left; 19.08% were closer to the liberal Center and the Center-Right; and roughly 14.64% expressed views ranging from ultra-conservatism and right-wing populism to the nationalist and sectarian Far Right. Last but not least, 3.3% of the accounts either openly supported Golden Dawn or were sympathetic to Nazi and fascist ideas.
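Adding up the five orientation shares shows that they are percentages of the whole sample of analysed accounts, not of the 59.4% subset; the small difference between 59.45 and 59.4 is rounding:

```latex
8.2 + 14.23 + 19.08 + 14.64 + 3.3 = 59.45 \approx 59.4, \qquad 40.6 + 59.4 = 100
```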

These percentages are only indicative; they may be said to bring out an existing tendency, while also reflecting the journalists’ subjective judgments. In no case do they amount to a projection of an election result, since the demographic characteristics of Twitter do not allow such conclusions to be drawn.

The table above shows the degree of automation of the accounts we collected. In particular:

■ We detected 476 bots out of a total of 3,698 accounts (12.87%).

■ The bots generated 57% of the total actions (tweets, retweets, mentions, quotes, replies, likes) during the reference period.

■ We found bots that supported all the parties of the Greek parliament, except the Union of Centrists [Enosi Kentroon] party.

■ SYRIZA and New Democracy are by far the political parties with the largest share of automated accounts. New Democracy declined to comment on our investigation. SYRIZA denied any connection with automated accounts.
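The gap between 12.87% of accounts and 57% of actions can be made concrete with a back-of-the-envelope calculation. It assumes, as a simplification, that the 57% refers to the actions of the 3,698 analysed accounts, of which 3,698 − 476 = 3,222 were not bots:

```latex
\frac{476}{3698} \approx 0.1287 \; (12.87\%), \qquad
\frac{0.57 / 476}{0.43 / 3222} = \frac{0.57}{0.43} \times \frac{3222}{476} \approx 1.326 \times 6.769 \approx 8.97
```

Under that assumption, each bot produced on average roughly nine times as many actions as each non-automated account.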

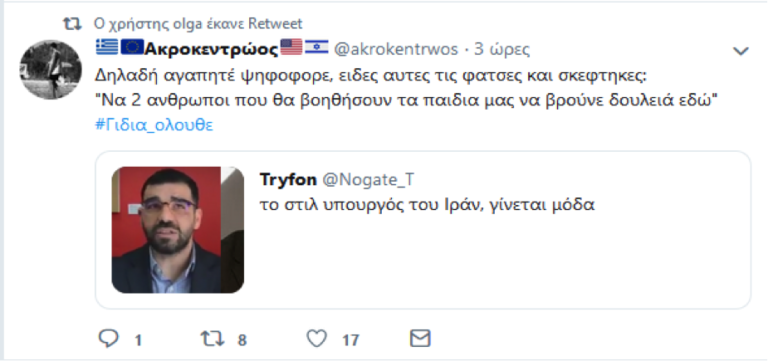

During the prime minister’s speech at DETH on 8 September 2018, user activity peaked, with retweets accounting for the largest share. At the beginning of Alexis Tsipras’ speech, at 9 pm, retweets far outnumbered replies, a feature often linked with increased automation (bots).
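The retweet-to-reply comparison can be computed hour by hour from the collected actions. In this sketch the input format, a list of hypothetical (hour, kind) pairs, is an assumption, and the numbers in the example are toy values, not our data; a high ratio is only a hint of automation, not proof.

```python
from collections import Counter, defaultdict


def retweet_reply_ratio(actions) -> dict[int, float]:
    """Map each hour to (number of retweets) / (number of replies).

    actions: iterable of (hour, kind) pairs, kind in {"tweet", "retweet", "reply", "quote"}.
    An hour with no replies is divided by 1 to avoid division by zero.
    """
    per_hour = defaultdict(Counter)
    for hour, kind in actions:
        per_hour[hour][kind] += 1
    return {hour: counts["retweet"] / max(counts["reply"], 1)
            for hour, counts in sorted(per_hour.items())}


toy = [(20, "retweet")] * 40 + [(20, "reply")] * 20 + [(21, "retweet")] * 300 + [(21, "reply")] * 10
print(retweet_reply_ratio(toy))  # {20: 2.0, 21: 30.0}
```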

As with Alexis Tsipras’ speech, there was a sharp increase in the total number of actions during Kyriakos Mitsotakis’ two-hour speech at the Vellidion Conference Center on 15 September 2018. Before Mitsotakis’ speech, the number of posts on Twitter was smaller than before the prime minister’s speech.

■ During both Alexis Tsipras’ and Kyriakos Mitsotakis’ speeches at the 2018 DETH, including a period of a few hours before and after them, there was a spectacular increase in the number of users’ actions. Bot activity rose comparably sharply over the same period, evidently for the purposes of political propaganda.

■ Many bots are interconnected and also used to promote TV productions.

■ The important thing is not the absolute number of automated accounts, but the volume and the intensity of the digital noise they produce. A small number of bots is enough to influence public opinion.

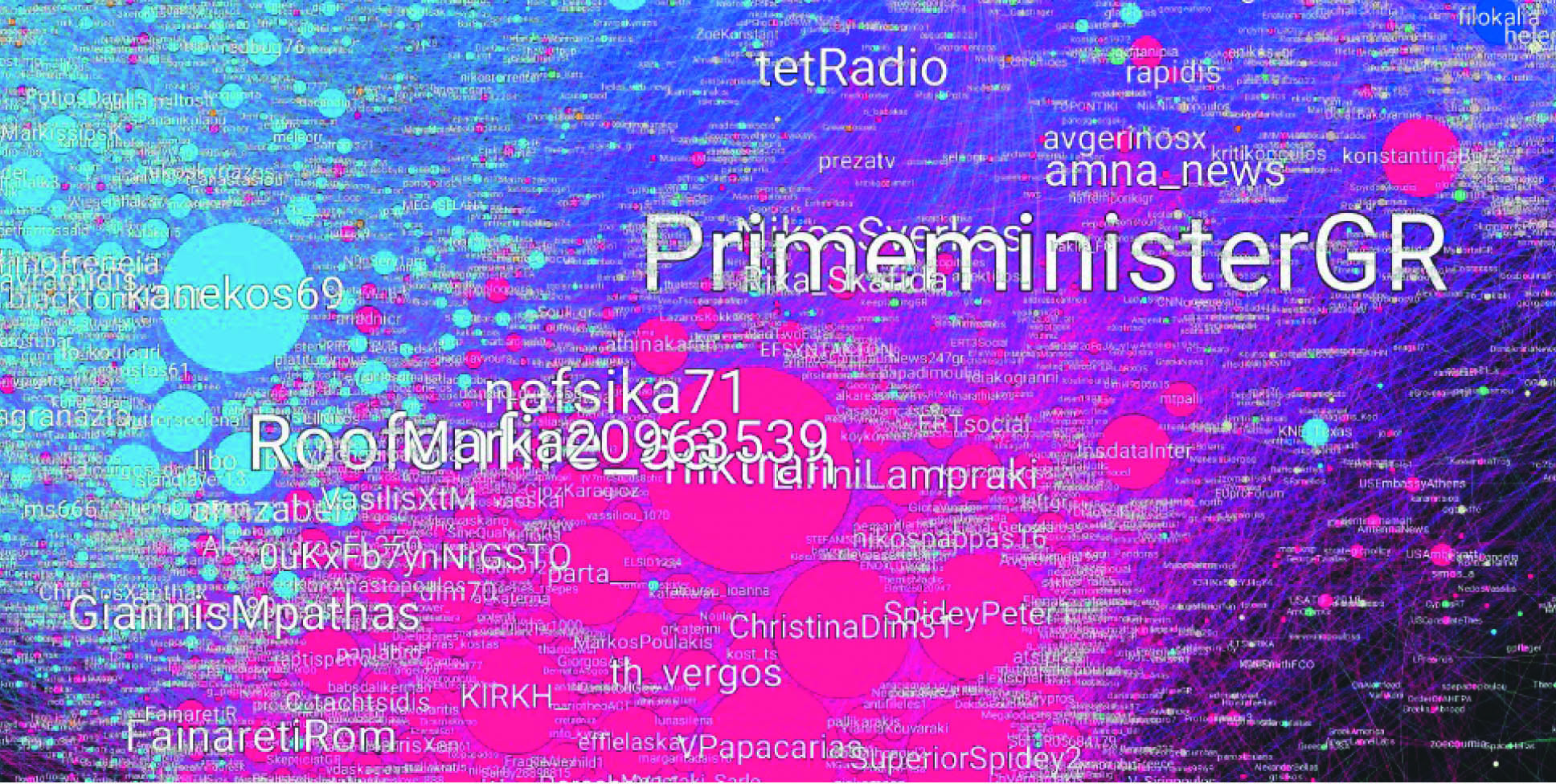

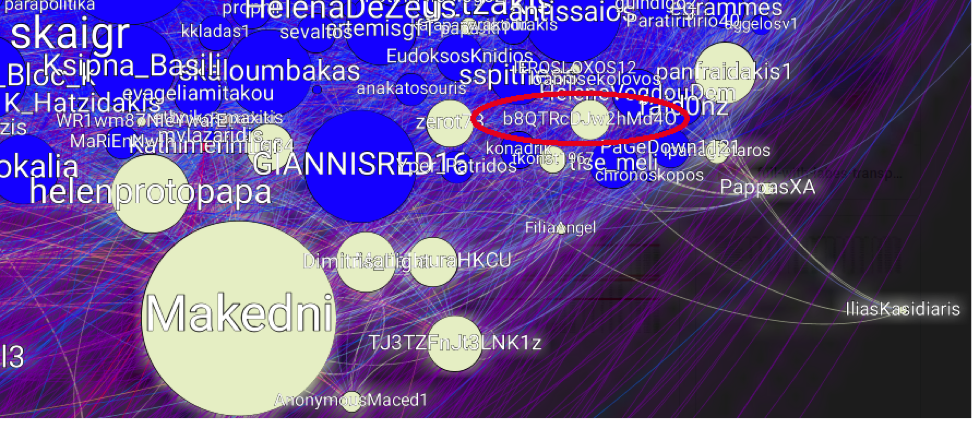

We created a graph to visualize all the clusters of the Greek accounts (both authentic and bots) of our sample. Every node in this graph represents a different Twitter account. Using algorithms, we searched for the forces that attract users to one another, thus forming a cluster, and also the forces that attract their clusters to one another (modularity).

The size of each node (that is, of each Twitter account) depends on its degree: the number of interactions (edges) linking it to the other accounts within a cluster. In other words, the bigger a name appears on the graph, the more accounts have interacted with it and reproduced its content. Correspondingly, a node also grows with the number of accounts whose content it has reproduced, which is how it enters a cluster.
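Modularity, the measure named above, scores a division of a network into clusters by comparing the share of links that fall inside clusters with the share that would be expected if links were placed at random. The article does not say which software produced the graph; the pure-Python sketch below only computes Newman’s modularity Q for a given partition and counts how often each account was reproduced (one way to size a node), using hypothetical toy accounts.

```python
from collections import Counter


def modularity(edges: list[tuple[str, str]], community_of: dict[str, str]) -> float:
    """Newman modularity of a partition of an undirected graph.

    Q = sum over clusters c of  L_c / m - (d_c / 2m)^2,
    where m is the number of edges, L_c the edges inside c and d_c the total degree of c.
    """
    m = len(edges)
    inside: Counter = Counter()
    degree: Counter = Counter()
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree[cu] += 1
        degree[cv] += 1
        if cu == cv:
            inside[cu] += 1
    return sum(inside[c] / m - (degree[c] / (2 * m)) ** 2 for c in degree)


def times_reproduced(retweets: list[tuple[str, str]]) -> Counter:
    """retweets: (retweeter, author) pairs -> how often each author was reproduced."""
    return Counter(author for _, author in retweets)


# Two tight toy groups joined by a single link.
edges = [("a", "b"), ("b", "c"), ("a", "c"), ("d", "e"), ("e", "f"), ("d", "f"), ("c", "d")]
split = {"a": "blue", "b": "blue", "c": "blue", "d": "pink", "e": "pink", "f": "pink"}
merged = {node: "blue" for node in split}
print(round(modularity(edges, split), 4), modularity(edges, merged))  # 0.3571 0.0
print(times_reproduced([("x", "a"), ("y", "a"), ("z", "d")]))         # Counter({'a': 2, 'd': 1})
```

Splitting the toy network into its two natural groups scores well above zero, while lumping everything into one cluster scores exactly zero; community-detection tools search for the partition that maximizes this score.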

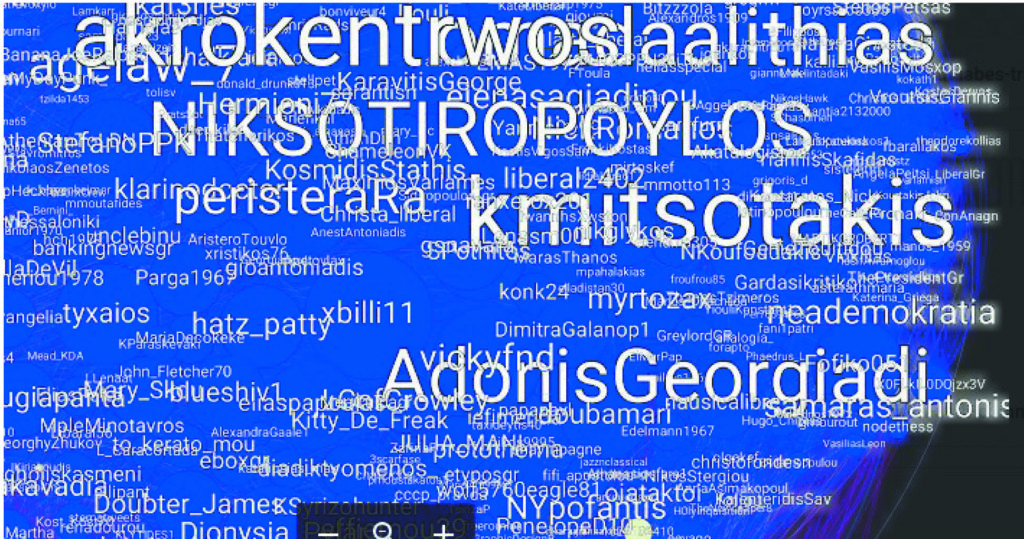

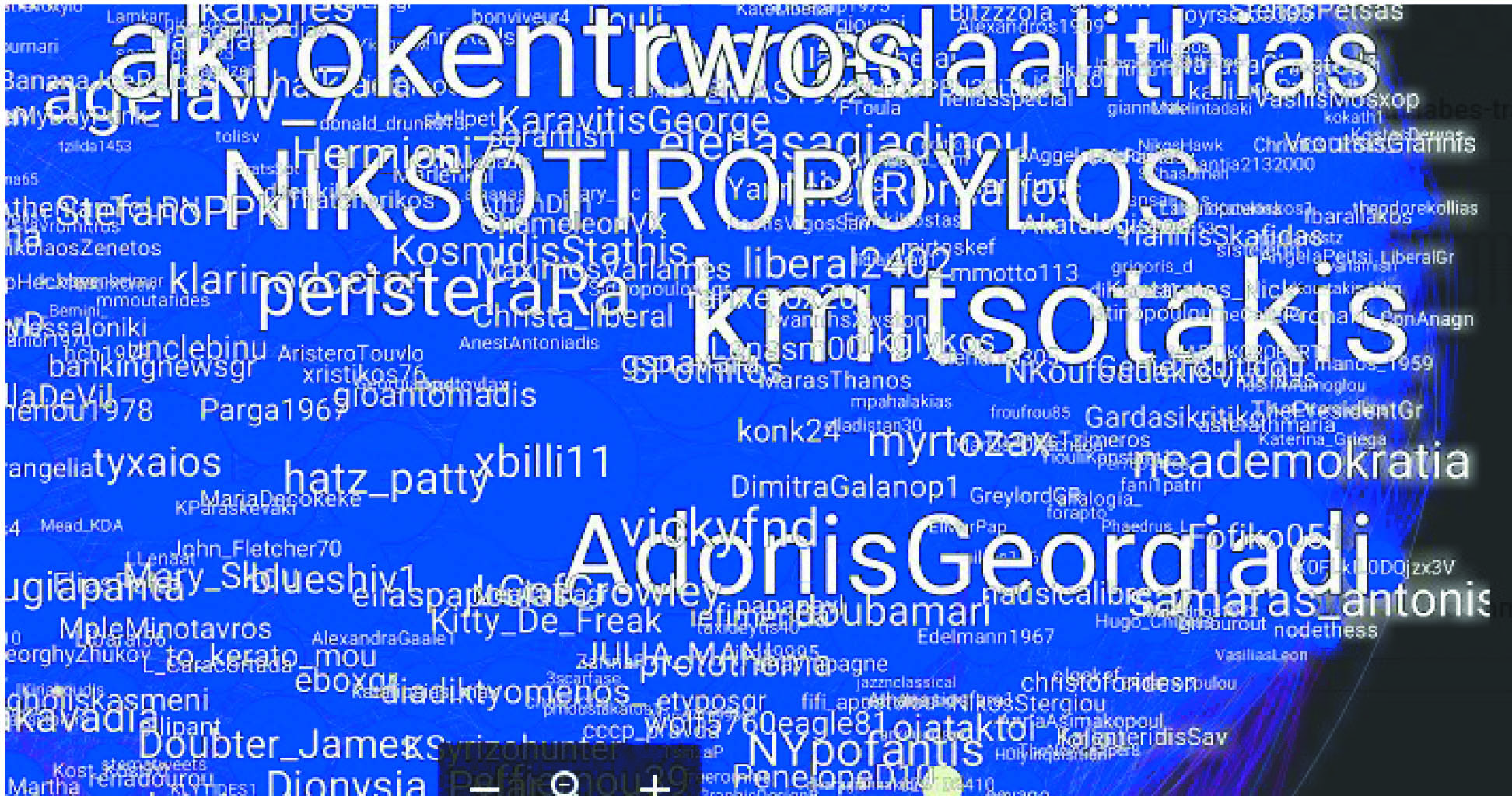

The clusters of Greek accounts on Twitter, both authentic and bots, depending on their mutual interaction and reproduction:

In blue, the Nea Dimokratia cluster; in fuchsia, the SYRIZA cluster; in pink, the cluster of accounts talking about GNTM. In between (in light blue color), we find a whole array of accounts, from active users with varying political views to web influencers interconnected both with real accounts and with bots. In white is the cluster of far-right accounts.

The following graph represents the most influential nodes – namely, accounts, both authentic and bots, with the largest number of actions (tweets, retweets, quotes, mentions) on a daily basis.

But what did we learn from all this?

■ We confirmed through a computational method what we had found through our journalistic analysis: the two major political parties dominate the content produced on the web, and they fight each other over it.

■ We confirmed that the political parties, especially the two major ones, are significantly promoted by bots in obscure and illegitimate ways.

■ We found out how particular actual persons (named or anonymous users) are connected with automated accounts.

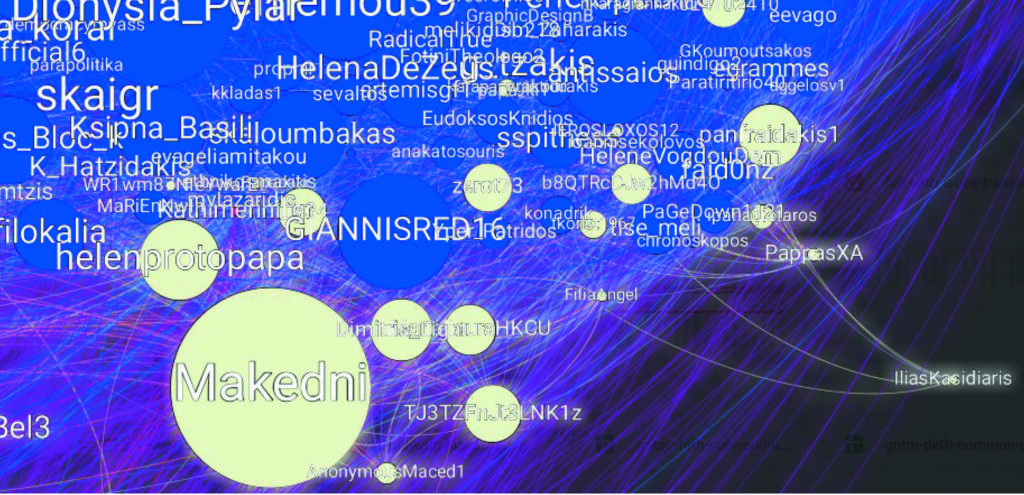

■ We detected 6 major clusters, shown on the graph, and gave each of the larger ones a different name. Between the three main clusters (the blue one, New Democracy; the fuchsia one, SYRIZA; the pink one, GNTM) lie the light blue users (nodes), which show high activity but do not belong to any of the three main clusters. As mentioned above, this in-between space contains a whole array of accounts, from active users with varying political views to web influencers connected both with real accounts and with bots. The white cluster next to New Democracy consists of accounts that express nationalist and far-right opinions. The orange color represents bots that show generic behavior, with standard cues or mutual salutations (good morning, good night), in an attempt to pass as real people.

It is noteworthy that, after constructing the graph that interconnects the accounts, we observed that around or very close to the New Democracy and SYRIZA clusters there are a few accounts belonging to users known not to support the corresponding party. This happens because these users interact regularly (e.g., journalists who reproduce politicians’ tweets, or accounts that receive many hostile comments). This is why Alexis Tsipras’ official account, @PrimeministerGR, is not located at the center of the SYRIZA cluster but in the space between SYRIZA and Nea Dimokratia, since it is evidently reproduced and commented on by everyone.
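Why an account reproduced by everyone ends up between clusters can be illustrated by looking at where the accounts that interact with it come from. In this hypothetical sketch, an account counts as a “bridge” when no single cluster supplies more than 60% of its interactions; the cutoff, the function names and the toy accounts are assumptions, and this is not how the graph’s layout was actually computed.

```python
from collections import Counter


def cluster_shares(interactors: list[str], community_of: dict[str, str]) -> dict[str, float]:
    """Share of an account's interactions coming from each cluster."""
    counts = Counter(community_of[a] for a in interactors)
    total = sum(counts.values())
    return {cluster: n / total for cluster, n in counts.items()}


def is_bridge(interactors: list[str], community_of: dict[str, str], max_share: float = 0.6) -> bool:
    """True if no single cluster dominates the account's interactions."""
    if not interactors:
        return False
    return max(cluster_shares(interactors, community_of).values()) <= max_share


clusters = {"s1": "SYRIZA", "s2": "SYRIZA", "n1": "ND", "n2": "ND"}
print(is_bridge(["s1", "n1", "s2", "n2"], clusters))  # True  (50% / 50%)
print(is_bridge(["s1", "s2", "s1", "n1"], clusters))  # False (75% SYRIZA)
```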

■ Proximity to one of the major clusters does not necessarily mean political affiliation.

■ The far-right cluster (the white one) is located much closer to, and interacts more frequently with, the New Democracy cluster.

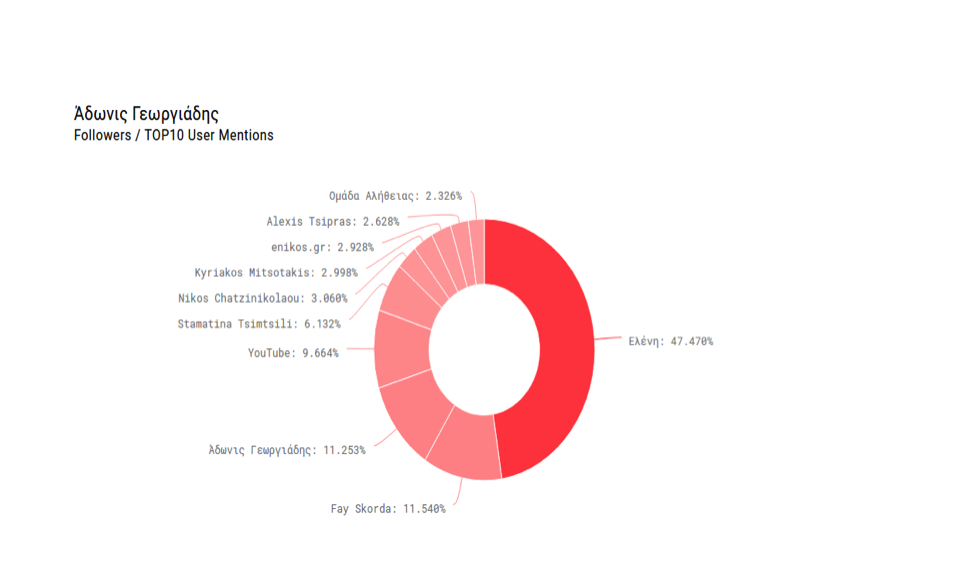

■ As expected, around the prime minister’s node there are many media outlets close to one another (such as Athens News Agency [APE], the newspapers I Efimerida ton Syntakton [“Ef.Syn.”] and To Pontiki, and the websites cnn.gr, news247.gr, tvxs, zougla, ert3 social, enikos and naftemporiki). For example, “Ef.Syn.” is located toward the outskirts of the SYRIZA cluster rather than at its center, where one finds Avgi newspaper and the website “To Kouti tis Pandoras” [Pandora’s Box], owned by journalist Kostas Vaxevanis. By contrast, the account of skai.gr is located closer to the center of the New Democracy cluster, where, besides the party’s president Kyriakos Mitsotakis and its vice-president Adonis Georgiadis, we also find Nikos Sotiropoulos, one of the most active supporters of ND, based in the city of Patras. The same cluster includes the newspapers Parapolitika and Eleftheros Typos, the websites bankingnews.gr, protagon.gr and “The President” (the latter owned by Yiorgos Mouroutis), as well as journalists Yannis Pretenteris and Paschos Mandravelis.

■ Perhaps surprisingly, the general graph does not show journalist and publisher Kostas Vaxevanis as an influential actor, although he is personally a highly active Twitter user (with 425,000 followers and 92,500 tweets). The reason is that during the 2018 DETH, Vaxevanis’ tweets with the DETH hashtag received very few retweets (19) from other users.

[Pics: The clusters of Greek accounts on Twitter, both authentic and bots, depending on their mutual interaction and reproduction; In fuchsia, the cluster of the accounts connected with SYRIZA; The center of the cluster of accounts connected with New Democracy; The far-right cluster on Twitter]

Twitter in Numbers

1.3 BILLION ACCOUNTS WORLDWIDE (AS OF APRIL 2019)

44% OF THESE ACCOUNTS WERE SHUT DOWN WITHOUT EVER POSTING A SINGLE TWEET

330 MILLION MONTHLY ACTIVE USERS

391 MILLION ACCOUNTS WITHOUT A SINGLE FOLLOWER

80% OF THE ACTIVE USERS ACCESS THE PLATFORM THROUGH THEIR MOBILE PHONE

24.6% OF THE VERIFIED ACCOUNTS WORLDWIDE BELONG TO JOURNALISTS

71% OF THE TWITTER USERS READ THE NEWS THERE

83% OF THE WORLD LEADERS HAVE AN ACCOUNT ON TWITTER

106 MILLION PEOPLE WORLDWIDE FOLLOW KATY PERRY, THE MOST POPULAR ACCOUNT WORLDWIDE (FOLLOWED BY JUSTIN BIEBER IN SECOND PLACE AND BARACK OBAMA IN THIRD)

8-10 MILLION ACCOUNTS PER WEEK ARE CHECKED BY TWITTER ON SUSPICION OF AUTOMATED BEHAVIOR; 75% OF THEM FAIL THE TESTS

5,787 TWEETS ARE POSTED EVERY SECOND, AND 500 MILLION EVERY DAY WORLDWIDE

80% OF THE USERS HAVE MENTIONED AT LEAST ONE BRAND IN THEIR TWEETS

Eleven ways to spot a bot on Twitter

Based on our months-long research and the international literature on the subject, we highlight some basic yardsticks that may help Twitter users be more cautious and block, or even report to the platform, suspicious accounts and possible botnets. Keep in mind that on Twitter, unlike Facebook, no one has to accept you as a “friend”: you can follow any user unless that user has chosen to keep a private profile (protected tweets).

Activity

This is the basic indicator of possible automation. A user’s average number of actions per day is easy to calculate: divide the total number of actions by the number of days since the account was created (Twitter shows the date each account was created). The threshold above which an account is considered suspicious of automated behavior varies. According to the Oxford Internet Institute team that conducted the research on computational propaganda on the web, an account with more than 50 actions per day is considered highly suspicious. According to DFRLab, the limit is 72 actions per day (that is, one action every 10 minutes for 12 hours non-stop). But be careful: many bots perform only a few actions per day, yet show a sharp increase in activity during specific events.
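As a rough illustration of this yardstick, the Python sketch below divides an account’s total actions by its age in days and compares the result with the two thresholds quoted above. The function names are hypothetical, and two choices are assumptions rather than part of either institute’s published method: what counts as an “action” (here, tweets plus likes) and the use of “at least 72” for the DFRLab limit.

```python
from datetime import date

# Thresholds quoted in the text.
OII_LIMIT = 50     # Oxford Internet Institute: more than 50 actions/day is highly suspicious
DFRLAB_LIMIT = 72  # DFRLab: 72 actions/day, one every 10 minutes for 12 hours


def actions_per_day(total_actions: int, created: date, as_of: date) -> float:
    """Average daily actions since the account was created (hypothetical helper)."""
    days = (as_of - created).days
    if days <= 0:
        raise ValueError("as_of must fall after the account's creation date")
    return total_actions / days


def activity_flags(rate: float) -> dict[str, bool]:
    """Compare a daily rate with both thresholds (assumed: > for OII, >= for DFRLab)."""
    return {
        "oii": rate > OII_LIMIT,
        "dfrlab": rate >= DFRLAB_LIMIT,
    }


# Christina Dim @ChristinaDim31: created 4 August 2018, 61,500 tweets and
# 102,000 likes by mid-April 2019 (assumed here to mean 15 April 2019).
rate = actions_per_day(61_500 + 102_000, date(2018, 8, 4), date(2019, 4, 15))
print(f"{rate:.0f} actions/day", activity_flags(rate))
```

With these inputs the account averages roughly 644 actions per day, far above both limits. Keep the caveat above in mind: a low long-run average can hide short bursts around events such as DETH, so a fuller check would also compare daily counts inside an event window with the account’s usual baseline.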

Anonymity

Another powerful indicator is a user’s profile. Of course, anonymity in itself does not prove that an account is a bot; however, if the profile photo is missing or there is no personal information whatsoever, one may reasonably start to become wary. In general, the less personal information, the greater the chance that some kind of automation is involved.

Amplification

This is also an important indicator. One of the basic tasks of a bot is to promote and boost the spread of messages generated by other users. This is achieved by reproducing the original content in its entirety (retweets), through “likes” and through “mentions”. A typical sequence in this case would be a series of tens or hundreds of retweets, with word-for-word reproduction of headlines and very few (or no) original comments. The most effective way to detect such behavior is a computational scan of a large number of tweets. However, even a careful review of someone’s last 5-10 tweets (or replies) is often enough to draw useful conclusions.
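The “last 5-10 posts” check can be expressed as a simple share-of-retweets calculation. The sketch below is hypothetical: the `Post` record stands in for whatever a data export provides, and the 90% cut-off and five-post minimum sample are illustrative assumptions, not thresholds taken from the literature cited in this article.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Post:
    """Minimal stand-in for one item on an account's timeline (hypothetical)."""
    text: str
    is_retweet: bool


def retweet_share(posts: Sequence[Post]) -> float:
    """Fraction of posts that are plain retweets; 0.0 for an empty sample."""
    if not posts:
        return 0.0
    return sum(p.is_retweet for p in posts) / len(posts)


def looks_like_amplifier(posts: Sequence[Post], min_share: float = 0.9,
                         min_sample: int = 5) -> bool:
    """Flag a sample made up almost entirely of retweets (assumed cut-offs)."""
    return len(posts) >= min_sample and retweet_share(posts) >= min_share


# Figures the article reports for Christina Dim (twitonomy.com,
# 26 March to 8 April 2019): 3,139 retweets out of 3,195 tweets.
timeline = [Post("", True)] * 3_139 + [Post("", False)] * (3_195 - 3_139)
print(f"{retweet_share(timeline):.1%}", looks_like_amplifier(timeline))
```

For that timeline the share comes out at about 98.2%. A retweet share alone cannot separate a bot from an enthusiastic human retweeter, which is why it works best alongside the activity and profile checks.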

Few posts, great results

Bots are effective insofar as they succeed in massively promoting content generated even by a single user. Another way to achieve the same result is to create a large cluster of fake accounts, each of which reproduces the same tweet just once. This would be a botnet in the service of propaganda.

Such botnets are easy to detect when the accounts involved do not behave normally.

For instance, in the USA on 23 August 2017, an account named @KirstenKellog_ (since suspended) posted a tweet attacking the newsroom ProPublica. The account seemed rather innocuous, as it showed minimal activity: it had posted just 12 tweets, 11 of which had been deleted. It had a mere 76 followers and did not follow anyone. And yet this one hostile tweet was retweeted more than 23,000 times almost instantly.
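The @KirstenKellog_ case combines two signals: an author with almost no activity or audience, and a single tweet whose reach dwarfs both. A hypothetical check for that combination might look like the sketch below. The 100x retweets-to-followers ratio and the 50-tweet ceiling are assumptions chosen for illustration; an organic viral tweet from a small account would also trip this rule, so it can only mark a tweet for closer inspection.

```python
def disproportionate_reach(
    retweets: int,
    followers: int,
    tweet_count: int,
    min_ratio: float = 100.0,  # assumed: retweets at least 100x the follower count
    max_tweets: int = 50,      # assumed: the author has posted very little overall
) -> bool:
    """Flag a tweet whose retweets vastly exceed its author's audience and activity."""
    reach_ratio = retweets / max(followers, 1)  # guard against zero followers
    return reach_ratio >= min_ratio and tweet_count <= max_tweets


# @KirstenKellog_: 12 tweets, 76 followers, one tweet retweeted over 23,000 times.
print(disproportionate_reach(retweets=23_000, followers=76, tweet_count=12))
```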

Fake or stolen profile photo

Many bots are more elaborate and hide behind real photos taken from various sources. A good test is a reverse search of the account’s profile photo (right-click on the photo and choose “Search Google for image”, or, on other browsers, copy the image address and paste it into the search bar of Google Images). It is quite common for accounts with male names to use fake photos of beautiful women. The reverse also happens, although far less often.

Alphanumerics after @

A handle made of alphanumerics that form a meaningless string instead of a name suggests that the account was created by a character generator for automation purposes. Such “names” look something like this (source: DFRLab):

LvppLxATOrh5dIE

BVBOPkbWksovV

MZKXUU5yiLfyll5

AyGyMHIHKQbL6X7

BSkhBDCfR0N0gIU

aOavx5DuoPrIAr3

In our research, we detected dozens of such accounts. One example is the account with the unintelligible name @ChuckCh00323644, which has since been suspended. It was created just a month before the 2018 Thessaloniki International Fair with a specific goal: to reproduce political comments against SYRIZA and in favor of Kyriakos Mitsotakis. On the other side, during the same period, there was also @X34iNxBHcYJ4qY4. We read the tweets of these highly active fake accounts one by one and observed that the latter bot posted non-stop during the 2018 DETH in favor of Alexis Tsipras and his government. Shortly afterwards, that account was suspended.
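A crude way to screen handles in bulk is to score how far they depart from names written in camel case. The heuristic below is our own illustrative assumption, not DFRLab’s method: it counts capital letters after the first character plus digits wedged between letters, and flags mixed-case handles of 12 or more characters that score at least 4. A separate rule, also assumed, flags a short name followed by six or more digits, as in @ChuckCh00323644.

```python
import re

# Assumed pattern: letters followed by a long run of digits, as in @ChuckCh00323644.
TRAILING_DIGITS = re.compile(r"^[A-Za-z_]+\d{6,}$")


def interior_uppercase(handle: str) -> int:
    """Count capital letters after the first character."""
    return sum(1 for ch in handle[1:] if ch.isupper())


def embedded_digits(handle: str) -> int:
    """Count digits sandwiched between two letters, like the '5' in 'x5D'."""
    return sum(
        1
        for i in range(1, len(handle) - 1)
        if handle[i].isdigit() and handle[i - 1].isalpha() and handle[i + 1].isalpha()
    )


def looks_generated(handle: str, min_length: int = 12, threshold: int = 4) -> bool:
    """Heuristic guess that a handle came from a random character generator."""
    handle = handle.lstrip("@")
    if TRAILING_DIGITS.match(handle):
        return True
    has_lower = any(ch.islower() for ch in handle)
    has_upper = any(ch.isupper() for ch in handle)
    if len(handle) < min_length or not (has_lower and has_upper):
        return False
    # Camel-case names (e.g. ChristinaDim31) score low; random strings score high.
    return interior_uppercase(handle) + embedded_digits(handle) >= threshold


for h in ["LvppLxATOrh5dIE", "aOavx5DuoPrIAr3", "@ChuckCh00323644",
          "@X34iNxBHcYJ4qY4", "@ChristinaDim31"]:
    print(h, looks_generated(h))
```

The last handle is a reminder of the method’s limits: @ChristinaDim31 passes the handle test, even though this article identifies it as automated from its activity. A handle check can only supplement the other yardsticks.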

A Babel of languages

It is common for automated commercial accounts that sometimes are also active in political propaganda to make an extremely wide variety of posts in different languages (e.g., translations of the same tweet into Arabic, English, Spanish and French), in order to gain access to wider audiences.

Moreover, one may notice that many bots, although their main language is, e.g., Greek, tend to reproduce content in other languages, since they are programmed to react to certain keywords or hashtags (#).

Commercial-unoriginal content

A prominent presence of ads is a classic indicator of a cluster of automated accounts. It is not uncommon for such commercial accounts to turn into political bots for a while and then return to their normal commercial activity.

During our research we did not come across many exclusively commercial accounts; for the most part, we found hundreds of accounts with unoriginal content (standard “good morning-goodnight” exchanges between users, generic cues, proverbs, cracker-barrel philosophy, beautiful landscapes, pets, top models, etc.).

Retweets and likes

Another good way to detect a bot is to look at the tweets it reproduces. There are thousands of accounts that may have been programmed to retweet or “like” a particular tweet, sometimes at the exact same moment and in the exact same way.
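Retweets fired “at the exact same moment” leave a trace that can be searched for in bulk data. The sketch below assumes retweet events are available as hypothetical `(account, tweet_id, timestamp)` tuples and slides a time window over each tweet’s retweets: if at least `min_accounts` distinct accounts fall within `window` of one another, all of them are flagged for that tweet. The one-second window and the three-account minimum are illustrative assumptions.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Iterable


def synchronized_retweeters(
    events: Iterable[tuple[str, str, datetime]],
    window: timedelta = timedelta(seconds=1),
    min_accounts: int = 3,
) -> dict[str, set[str]]:
    """Map each tweet ID to the accounts that retweeted it in a tight burst."""
    by_tweet: dict[str, list[tuple[datetime, str]]] = defaultdict(list)
    for account, tweet_id, ts in events:
        by_tweet[tweet_id].append((ts, account))

    flagged: dict[str, set[str]] = {}
    for tweet_id, items in by_tweet.items():
        items.sort()  # chronological order (ties broken by account name)
        start = 0
        for end in range(len(items)):
            # Move the left edge forward until the window spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {account for _, account in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.setdefault(tweet_id, set()).update(accounts)
    return flagged


# Hypothetical events: three accounts retweet within half a second, two others later.
t0 = datetime(2018, 9, 8, 20, 0, 0)
events = [
    ("bot_a", "tweet1", t0),
    ("bot_b", "tweet1", t0),
    ("bot_c", "tweet1", t0 + timedelta(milliseconds=400)),
    ("human_d", "tweet1", t0 + timedelta(minutes=7)),
    ("human_e", "tweet2", t0 + timedelta(hours=1)),
]
print(synchronized_retweeters(events))
```

Rebuilding the account set for every window keeps the sketch short; on large datasets one would maintain running per-account counts instead. Accounts that keep appearing together across many flagged tweets are the strongest botnet candidates.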

A semiology of tweets

Automated accounts, especially the least elaborate ones, are designed to perform very specific tasks; inevitably then, they often seem “obsessed” with a particular subject, and they do not post anything else.

Human language is still too complicated for machines to use convincingly. As a result, a bot’s tweets can easily reveal its algorithmic design. Moreover, if someone “doesn’t get” an obvious joke during a conversation or changes the subject abruptly and unnaturally, one may reasonably doubt whether this “someone” is actually a real person.

Generally strange behavior

Examples: someone follows you and then unfollows you to draw your attention. You receive a direct message (DM) the moment you follow someone. You receive a reply the moment you tweet about something related to a commercial offer. You are followed by accounts with a huge number of tweets on widely varying subjects. You receive direct messages full of ads and commercial content.

Of course, all of the above may also be done by a real person. However, it has been reported that 5% of active Twitter users reply with an automated message when someone starts following them (source: Quora.com, Shameel Abdulla, co-founder & CEO, Cloohawk).

Pro-SYRIZA Bots

1. “Αδεσποτος” retweeted

Sasa Mitta @sasamitta –

Replying to @KoutsopoulosLeo – These ones will go back out on the streets. Maybe you will have better luck advertising them??? Two boys and a grumpy girl in the middle. Almost 4 months old

——–

2. “Αδεσποτος” retweeted

Cat Rescue Athens @CatRescueAthens –

#Donut the sweet and patient cat, patiently waits his turn to find a home of his own… @AdoptDontStop

——–

3. “Αδεσποτος” retweeted

yakinthos kritikos @YakinthosK –

Breaking news: Within the next 48 hours Adonis and Loverdos to be summoned as suspects for the Novartis scandal according to a press report —

BREAKING NEWS

periodista.gr – Breaking news: Within the next 48 hours…

Evening information by ereportaz.gr report that Adonis Georgiadis and Andreas Loverdos are expected to be summoned within the next 48 hours as suspects…

THE ACCOUNT THAT HAS A SOFT SPOT FOR STRAY ANIMALS

The account “Stray animal” [Adespotos] @adespotos was created in February 2018; its profile photo shows a puppy wearing a hat. In 14 months, it has amassed more than 89,700 tweets, 2,650 followers and 940,000 likes, with an unbelievable average of 477 actions per day. All its quantitative features (actions per day, follower-to-following ratio, number of “likes”, etc.) show that this is an automated account.

Our assessment was confirmed by the qualitative analysis of the account’s tweets. It is noteworthy that amidst the hundreds of tweets per day there are very few original comments or replies to other users. This is a clear case of an automated account which, while programmed to reproduce others, occasionally interrupts this unending flow with personal comments or replies, giving the impression that a real person is constantly behind it.

This bot is followed, among many others, by Dimitris Papadimoulis, member of the European Parliament for SYRIZA. Browsing its profile, one may notice that this is a socially sensitive “person”, particularly fond of animals, stray or otherwise (dogs, cats, rare birds), and of their protection. Most of its posts are retweets on these themes.

At some point, amidst hundreds of non-political tweets, we suddenly see a post supporting the Prespa Agreement, with a photo of Tsipras and Zaev.

“Αδεσποτος” retweeted

Kritharistas @metallicaclass –

Guys, this is progress, peace, cooperation. This is the future. Adonis, Mitsotakis, Voridis & Co. are the neo-Nazi snake they feed only to have it bite them too, interrupting their speeches. An obscurantist past and nothing more.

On the same day, we see a retweet that promotes former minister of Tourism Elena Kountoura with an aggressive comment against Olga Kefalogianni and with the hashtag #ΝΔ_ξεφτίλες [#NeaDimokratia_Low lifes].

This hashtag, along with #skaNDala (meaning: New Democracy scandals), also very popular in recent years, is the SYRIZA-inspired reply to the equally popular, New Democracy-inspired #Syriza_Xeftiles [SYRIZA_Low lifes].

“Αδεσποτος” retweeted

el_ges @el_ges –

neaselida.gr/ellada/elena-k…

Elena Kountoura: “Sweeping” Tourism awards – Ready for another.

What’s up @olgakef, Women’s Day again?

#ΝΔ_ξεφτίλες

Elena Kountoura: “Sweeping” Tourism awards – Ready for another.

After being awarded as “Best Minister of Tourism in the World” in the international competition PATWA International Awards, Elena Kountoura…

CHRISTINA WHO LOVES ALEXIS AND AN OLD LEFTIST RADICAL

1. FRATERNITY TROLL @Fraternitytroll –

I don’t know what #Τσιπρας will promise at the #ΔΕΘ2018. But it’s the first time I see a Prime Minister tour-guided for 4 hours, talking to the exhibitors like an average citizen. I also admire him for suppressing his leftist feelings about the USA to honor the president of the country.

MARCH ON, ALEXIS, WE ARE WITH YOU. —

Christina Dim:

BRAVOOO to our prime minister! Credits to him, let him know we are with him!!!

——-

2. User Christina Dim retweeted

Rena Dourou @RenaDourou –

We are in contact with all the qualified services to make their work easier, but also to suspend the perjurers.

Region of Attica: Zero tolerance to corruption…

——

3. User Christina Dim retweeted

Alexis Charitsis @alexischaritsis –

Inauguration of the #Kanalaki Pargas Sports Hall, amidst a great crowd. In 2015, we found the project abandoned for 11 years, we unblocked it, in cooperation with #Topiki#Aftodioikisi [#Local#Government] & we financed it to deliver it today to the citizens.

——–

4. niktrah @niktrah –

D. Koutsoumpas: The Communist Party [KKE] will vote against the Prespa Agreement

KKE will vote against the Prespa Agreement because they consider it to be “an agreement that does not help to solve the bilateral problems…”

Christina Dim

@ChristinaDim31

Reply to user @niktrah

Well, we didn’t expect anything better from them!!! They should be ashamed!! Good company, KKE with New Democracy and PASOK!!!

Another example of an account showing automated behavior in favor of SYRIZA is Christina Dim @ChristinaDim31, which was activated for the first time on 4 August 2018, one month before DETH.

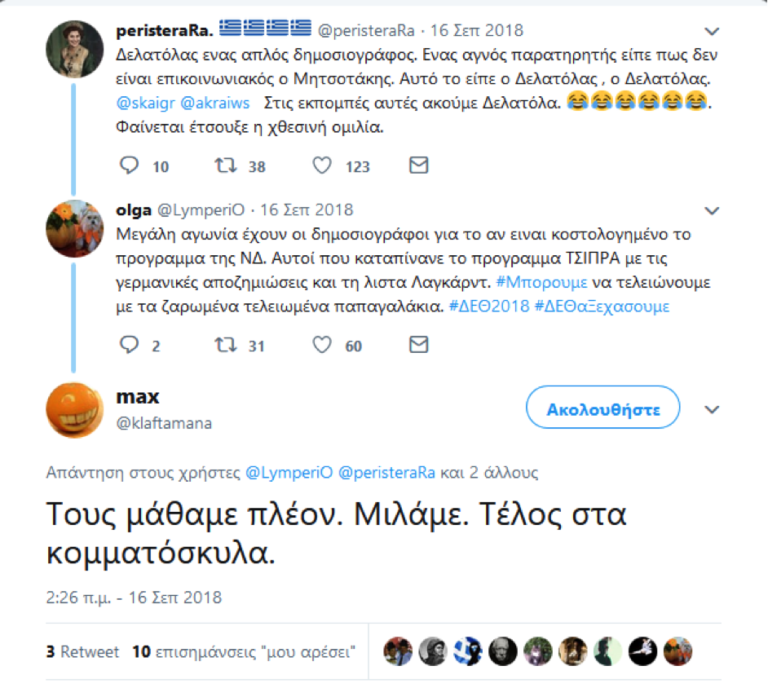

After an initial examination of the account’s activity from the day of its creation until 17 September, we found that Christina Dim had been tweeting 504 times per day. From 7 to 17 September, the account tweeted 670 times per day about DETH, ranking 7th on the list of top commentators on DETH. Its actions are mostly retweets and “likes” in favor of the prime minister and SYRIZA, plus a few original comments that glorify Alexis Tsipras in particular. At the same time, it attacks the opposition in highly aggressive and quite rude language.

An additional indication of the political affiliation of this automated account with SYRIZA is provided by its replies during the same period to well-known pro-SYRIZA accounts such as The-Roof-is-on-Fire @Roofonfire_aa and niktrah @niktrah, the top commentator during the 2018 DETH.

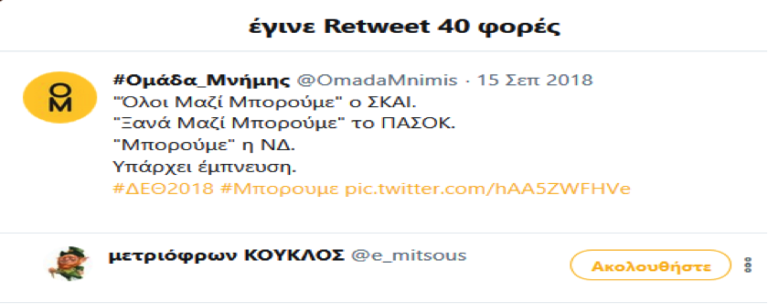

User niktrah does not hide its political affiliation, but it does hide its name. Its self-presentation reads: “Old leftist radical, graduate of the Polytechnic School and member emeritus of the Technical Chamber of Greece. I don’t talk with racists, fascists and flamers”. The account was created back in 2011 and is followed by dozens of members of the government and of SYRIZA, as well as many journalists. In the same cluster we find #Omada_Mnimis [#Memory_Team] @OmadaMnimis. The “Memory Team” (7,595 followers as of 12 May 2019) is SYRIZA’s answer to the infamous “Truth Team” (Omada_Alithias @omadaalithias) of New Democracy (30,900 followers as of 12 May 2019). In March 2019, another similar pro-government account was created, the “Reveal Team” (OMADA_APOKALYPSIS @OApokalypses), as an extra resource in the battle against the “Truth Team”.

Christina Dim continues its intense automated activity to this day, with 61,500 tweets and 102,000 likes in its mere 8-month lifespan (as of mid-April 2019). The vast majority of its actions are retweets and likes. Moreover, it reproduces a classic pattern of bot-related activity that we found on many occasions during our research and that is also well documented in the relevant literature: the almost ritual process of saying “good morning” and “goodnight” to real or virtual followers, accompanying these salutations with generic cues and various photos of landscapes, flowers, animals or, more often, women, usually half-naked.

1. Christina Dim @ChristinaDim31 –

Buddies, my loved ones, I wish you a pleasant evening and a beautiful dawn of the new week, with health and happiness!!!!

———-

2. Christina Dim @ChristinaDim31 – 14 Sep 2018

Good morning, I wish to all my beloved friends a beautiful and calm weekend with people who love you and people you love!!! Many kisses!!!

———

3. User Christina Dim retweeted

Nikos Pappas @nikospappas16 –

In the end, the rally in Galatsi had a negative impact on the environment: Mr. Marinakis’ media used tons of ink and paper to downgrade it.

———

4. User Christina Dim retweeted

Rena Dourou @RenaDourou –

We are in contact with all the qualified services to make their work easier, but also to suspend the perjurers.

Region of Attica: Zero tolerance to corruption…

———

User Christina Dim retweeted

Alexis Charitsis @alexischaritsis –

Inauguration of the #Kanalaki Pargas Sports Hall, amidst a great crowd. In 2015, we found the project abandoned for 11 years, we unblocked it, in cooperation with #Topiki#Aftodioikisi [#Local#Government] & we financed it to deliver it today to the citizens.

———-

5. User Christina Dim retweeted

OMADA APOKALYPSIS @OApokalypses –

PASOK and New Democracy pets, listen what Mister Babis is saying about your historical leaders. New Democracy should honor him and make him a candidate somewhere.

Christina Dim constantly reproduces the content of accounts owned by MPs and members of SYRIZA, as well as ministers of the SYRIZA government, such as N. Pappas, A. Charitsis, D. Papadimoulis and R. Dourou; the account also reproduces the content of the above-mentioned accounts that support SYRIZA, while systematically promoting the content of the accounts of specific websites, such as avgi.gr (the website of the newspaper Avgi) and K. Vaxevanis’ site.

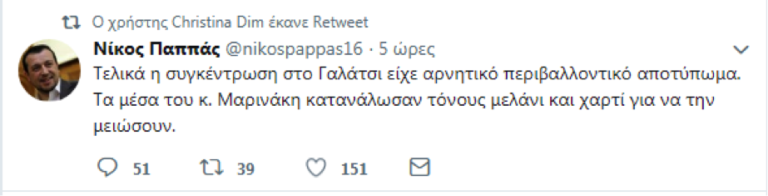

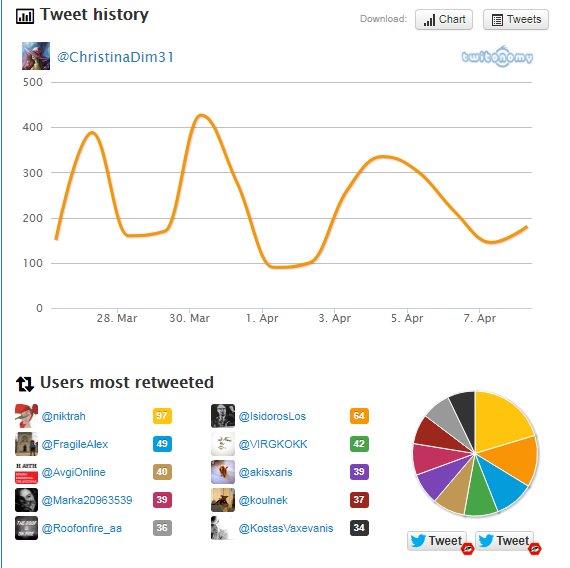

One last indicator: according to twitonomy.com, a tool that provides information about the activity of Twitter accounts, Christina Dim tweeted 3,195 times in a mere 14 days, from 26 March to 8 April 2019; 98% of these tweets (3,139) were retweets of other users, who are represented in the following graph.

Pro-New Democracy bots

The Greek Fighter and Deep Twitter

The account Panagiotis Pavlidis (panagiotispavlidis @47panagotis) is a typical case of a camouflaged automated account that serves a double purpose: targeted and anonymous political propaganda along with commercial promotion. This account, with a very common Greek name, a gull as its profile photo and a horse as its header photo, had 46,500 followers as of 30 March 2019, while itself following 48,600 other users.

In the reference period of our research, this user appeared to perform about 502 actions per day on Twitter! Common sense and the relevant literature agree that it is very rare for a human to perform so many actions in a day. A highly active user usually does not perform more than 40-50 actions per day.

Pavlidis’ followers come literally from all over the world; they speak different languages and, naturally, many of them are also fake or automated accounts. One prominent Greek politician who follows this account is New Democracy MP and former minister Nikos Dendias.

So what does this bot do? It constantly reproduces tweets by other non-political accounts, adding various and mostly non-political comments of its own, allegedly “profound” dictums, pictures of top models or famous soccer players such as Lionel Messi, images of landscapes, flowers, etc.

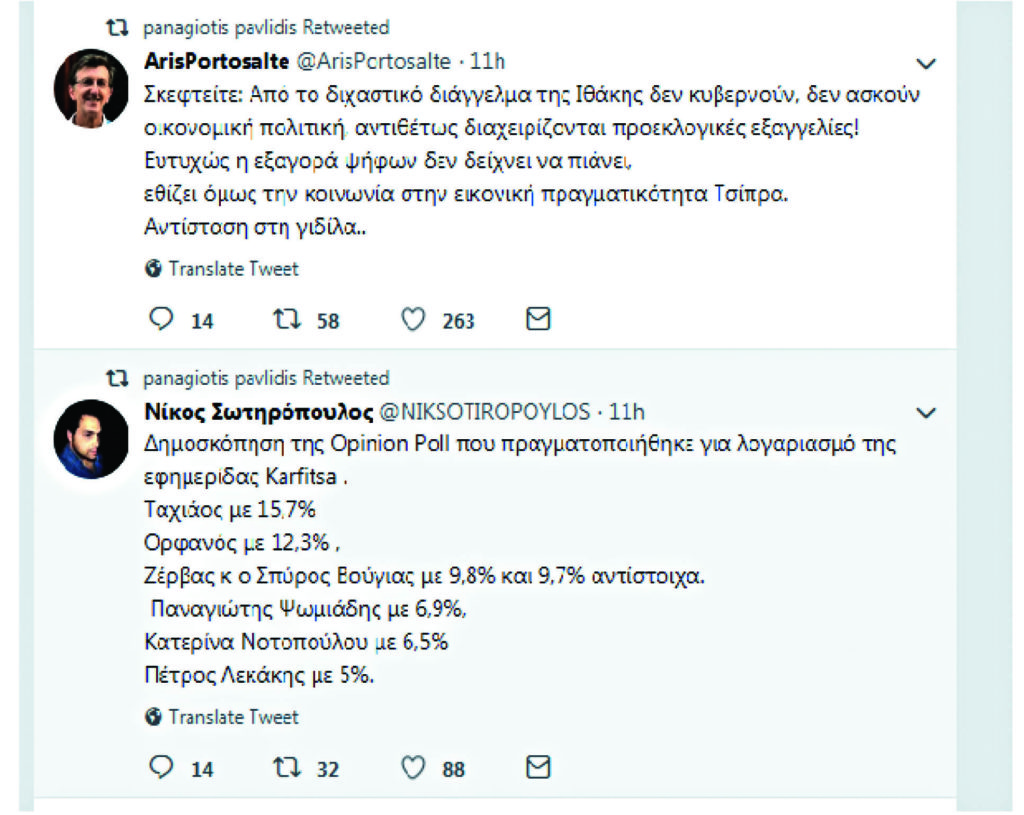

One may easily come to suspect that this account is a bot because of the alphanumerics in its name. Beyond that, amidst the top models and the landscapes, two tweets pop up like interstitial political ads: one by well-known anti-SYRIZA journalist Aris Portosalte, of SKAI TV and radio, and the other by Patras-based Nikos Sotiropoulos, one of the most active supporters of New Democracy.

1. panagiotis pavlidis Retweeted

Eleni @9ua1kXgxzL6NR5W –

There are many who can tell a woman a fairytale, but only a few who can make her live it. Good morning, my loved ones, have a beautiful weekend full of smile

———

2. panagiotis pavlidis Retweeted

Sofia @Zerod00 –

“All dreams deserve to fulfill themselves… let’s try for them”!

Tasos Leivaditis

Have a nice weekend exactly as you like it, my friends!!

———-

3. panagiotis pavlidis Retweeted

Sakis @GMXUe5DOSZPCM –

Good morning, have a nice weekend!!!!

———–

4. panagiotis pavlidis Retweeted

ArisPortosalte @ArisPortosalte –

Think: After the factionary message from Ithaca, they don’t govern, they don’t implement an economic policy, they just make electoral promises! Luckily, this political bribe doesn’t seem to work. But it makes society accustomed to Tsipras’ virtual reality. Resistance to the rednecks….

On the same day, the bot retweets from another account, adding the following comment:

panagiotis pavlidis Retweeted

Hellenic Fighter @kostas3012 –

Replying to @tarrott

Up there, on the rock, it [i.e., the Greek flag] is painted, Kastelorizo!!!

Good morning!

This was during the intense controversy over Kastelorizo, following former minister Tsironis’ statements. The account with the telling name “Hellenic Fighter” retweeted here is also automated; one need only look at its quantitative data. On the “Fighter’s” profile picture, we see a Byzantine eagle, a warrior, the Greek flag, the Acropolis and Hagia Sophia in Istanbul. The “Fighter’s” motto: “My home… Greece!!! My religion… Christian Orthodox!!! Greece, thank you for having given birth to me!!!”. This nationalist account is followed by the official accounts of Nikos Dendias and Dora Bakogianni.

1. pavlidis @spirosmouries1 –

Good morning all day long! With love and joy…!!!

…Every day is unique and unrepeatable…!!

Don’t let it pass by without “living” it…!!

…With Love & Smile everywhere…!!

…Imeroviglion, Kihladhes, Greece…

———-

2. Hellenic Fighter Retweeted

Maria @w5Fmb3dIIN6Q8lw –

A SWEET GOOD MORNING TO ALL!!!!!

A LOVELY WEEKEND TO ALL OF US…

Sweet work for everyone…..

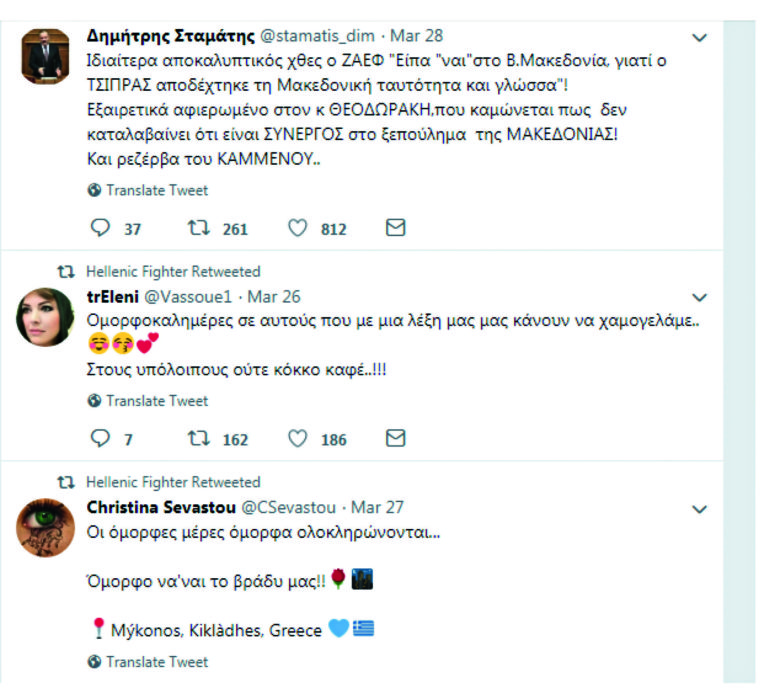

Suddenly, amidst hundreds of irrelevant comments and photos made specifically to draw the attention of thousands of users on Twitter, we see a tweet by New Democracy MP Dimitris Stamatis, who, among other things, is hitting hard at Alexis Tsipras and Stavros Theodorakis for “selling off” Macedonia.

Two days later, on 27 March 2019, the same account reproduces the following tweet, promoting Stelios Kymbouropoulos’ candidacy for the European Parliament with New Democracy and at the same time calling “good-for-nothing bullies” candidates Rallia Christidou, Lydia Koniordou and Petros Kokkalis, all supported by SYRIZA.

During DETH, “Hellenic Fighter”, along with its nationalist slogans and comments, seizes the opportunity to question the republican constitution itself, writing that “in this country, the Republic has failed us”.

1. Dimitris Stamatis @stamatis_dim –

ZAEV was a pure joy yesterday: “I said yes to the name North Macedonia because TSIPRAS accepted the Macedonian identity and language”. This one goes out to Mr. Theodorakis, who the selling-off of MACEDONIA! Plus, he is a spare tyre of Kammenos…

——–

Hellenic Fighter Retweeted

trEleni @Vassoue1 – A good morning to all those who make us smile with one word…

To all the rest, not even a grain of coffee..!!!

——

Hellenic Fighter Retweeted

Christina Sevastou @CSevastou –

Our beautiful days have a beautiful ending… A good evening to all of us!!

Mykonos, Kikladhes, Greece

———–

2. Hellenic Fighter Retweeted

Lavrentis Beria @LavrentisBeria4 –

There are the good-for-nothing bullies and the ones who really don’t fear anything. You against them all, comrade. You got them.

————

3. Hellenic Fighter @kostas3012 –

Reply to user @vyzantini

This is what happens when you ask for it! You kill the snake early, not when it’s fully grown! In this country, the Republic has failed us!

——-

Hellenic Fighter @kostas3012 – 8 Sep 2018

Another Saturday evening is here…

Have fun wherever you are!!! Have a nice evening! Saturday evening, in its desolation, I am singing to you,

Where are you, can’t you hear me? [Lyrics from a popular Greek song]

BOTNETS CONNECTED TO POLITICAL PARTY ARMIES

The above case is crucial to our investigation because here we have an automated account (Hellenic Fighter) with obvious commercial features, which is connected with other clusters of automated accounts (botnets) that, besides reproducing one another, connect to and reproduce the content of an account that is clearly neither fake nor automated: the account “Lavrentis Beria”. Anyone with even a passing knowledge of Greek political Twitter in recent years is surely familiar with this account, a typical case of a “celebrity account” with a pseudonym and a large crowd of followers.

ANTI-COMMUNIST TROLLS – RUSSIAN NAMES

The real Lavrentiy Beria was, of course, the Georgian politician and head of the Soviet secret services (1938-1953), one of Stalin’s closest associates. He was a driving force behind the persecution of dissidents and the purges of the Soviet Communist Party, violently suppressing Stalin’s opponents. After Stalin’s death in 1953, he formed a troika that briefly led the country in Stalin’s place. Soon afterwards, he was accused of treason, sentenced to death and hastily executed. He has been historically accused of rape, torture and murder, and held responsible for the Katyn massacre.

Back to Greek Twitter. User “Lavrentis Beria” is connected to another “Soviet” active user, coincidentally another supporter of New Democracy, with the pseudonym “Georgy Zhukov”, the name of the Marshal of the Soviet Union during the victorious Soviet war against the Nazis. The real Zhukov later fell out of favor with Stalin and was relieved of his post.

Those two historical personalities inspired these two anti-Communist accounts on Twitter (there are other similar ones too, such as @LeBrezhnev) that keep promoting New Democracy and attacking the government, Alexis Tsipras, SYRIZA and the Left in general. Despite rumors, their administrators’ real names have not been publicly confirmed.

Robots talking to each other

“Paris Tzina” who kisses her friends goodnight and is connected to right-wing influencers

Looking at the profile of the account Paristzina @paristzina, we read its self-presentation as “single, NUTS ANIMAL LOVER VOLUNTEER AT MUNICIPAL ANIMAL WELFARE ASSOCIATION”, born somewhere in San Francisco, California. The content reproduced by the account confirms the “animal-loving” part, since it often retweets popular Greek and foreign accounts promoting animal welfare. It also retweets pictures and videos with happy and cute pets posted by other users on Twitter.

Besides cute pets, “Paris Tzina” retweets photos of beautiful landscapes, family moments, couples hugging and kissing, and half-naked women, all generated by other automated accounts. In reality, this is a botnet. One of its members, the account Aquarius @tzinaforever, created in January 2018 and counting 36,600 tweets, 126,000 likes, 9,194 followers and 7,149 accounts followed, regularly posts pictures of half-naked women to draw other users’ attention, while also retweeting standard salutations and generic cues from other accounts.

1. User Paristzina Retweeted

Stray Volunteers @adespotoi –

Brownie, 1 year old, Drahthaar. Found in Larissa. Obviously not for hunting, but lovely for a family. Social, tender, leash-trained and so playful. 6978121020, Alexandra

———

2. User Paristzina Retweeted

demy @demymanolakis –

A lovely rainy noon

———–

3. User Paristzina Retweeted

Aquarius @tzinaforever –

A lovely noon…

Similar cases include user “Lena” @lena_lenaki_len, which, according to our data, tweets 1,131 times per day; user “Helen Angel” @AngelAngelopou1, with 935 tweets per day; and user “Marilena” @et_pd4, with 352 actions per day and a female profile photo that can easily be found on Pinterest, the popular image-sharing platform.

All these robots pretend to talk to one another and exchange greetings, a typical indicator of bot-related automated activity.

1. User Paristzina retweeted

Marilena @et_pd4 –

Good morning..

A beautiful, relaxed day

Calm, smiley

Happy birthday to all those celebrating their birthday today!!

A lovely Sunday to us all!!

Heraklion, Crete

————-

2. Paristzina@paristzina –

Goodnight, girl

A lovely dawn

———

Marilena @et_pd4

Have a nice, relaxed, calm Tuesday evening.

Good night!!

———

Marilena @et_pd4

Reply to user @paristzina

Goodnight, Tzina..

A lovely dawn!!

In the same context, we singled out the account “Anna” @Anast1257, with which “Paris Tzina” holds conversations with the participation – surprise, surprise – of our old friend “Hellenic Fighter” @kostas3012, highlighting the complex yet tight structure of a botnet. Amid pictures of cute pets and exchanges of profound philosophical verses, some interaction with accounts that spread nationalist hatred apparently seems quite in order…

Paristzina @paristzina –

“I am tired, I am going to bed. I am small and get tired easily. I need sleep to grow up”.

Goodnight, Twitter crowd! Sweet sweet dreams!

——

Anna @Anast1257 –

Have a pleasant evening

——-

Hellenic Fighter @kostas3012 –

Have a nice evening, ladies, and a pleasant dawn!

——-

Anna @Anast1257 –

A pleasant dawn to you too

——

Hellenic Fighter @kostas3012

Reply to users @Anast1257 @paristzina

Thank you very much!

FROM REPRODUCING IMAGES OF ANIMALS AND PHILOSOPHICAL CATCH-PHRASES TO INTERACTING WITH ACCOUNTS SPREADING NATIONALIST HATRED

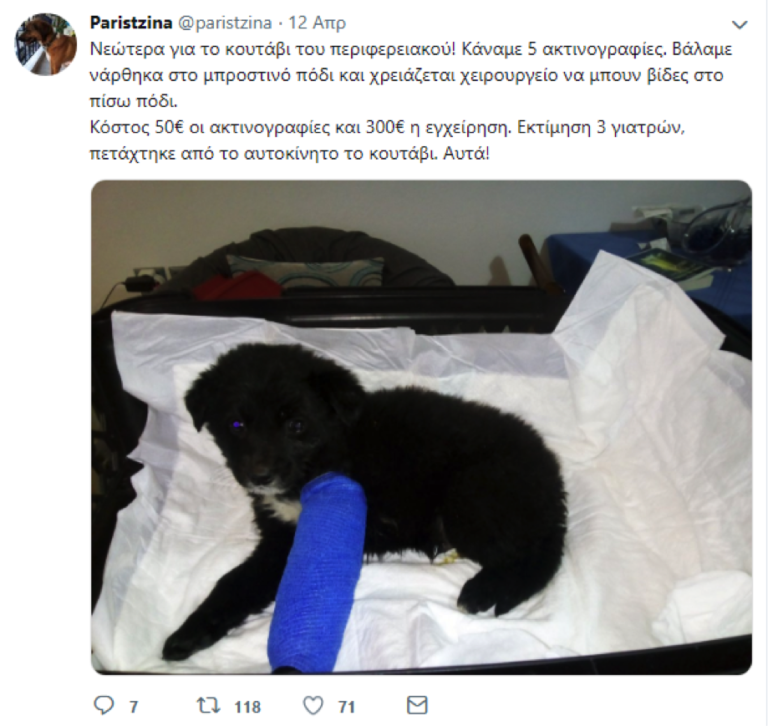

However, the supposedly animal-loving profile of “Paris Tzina” collapses as soon as one notices its blatant inconsistencies. Take, for example, a post on 12 April 2019 about a small black dog found abandoned, taken care of and brought to the vet by “Paris Tzina”. The puppy, shown in the picture below, has a big blue splint on its leg, and “Paris Tzina” tells its friends that another leg will need surgery to insert screws.

And yet, just 20 days later, on 2 May 2019, a post shows the exact same picture of the dog, letting us know that it was “exhausted” after running with the splint (!) under the sun. So we are supposed to believe that the puppy managed to run with this huge splint on its leg while recovering from surgery to put screws in one of its other legs…

1. Paristzina @paristzina –

Update about the puppy on the belt highway! We took 5 x-rays, put a splint around the front leg, needs surgery to put screws on the back leg. The cost, 50 euros, plus 300 euros for the surgery. According to 3 doctors, the puppy was thrown out of a car. That’s all!

———–

2. Paristzina @paristzina –

Goodnight, Twitter crowd! Exhausted today, running with the splint under the sun! A pleasant dawn to Hermes (not of Praxiteles, the other one, who was thrown out by a “nice” gentleman)!

But the mission given to “Paris Tzina” by its maker is not yet accomplished; the account also has to spread some pro-New Democracy political propaganda: retweeting, amidst landscapes and pets, posts by MPs such as D. Stamatis, “liking” posts by Maria Spyraki and Adonis Georgiadis, and regularly reproducing accounts known for their support of New Democracy, such as Georgy Zhukov @GeorgyZhukov, Lavrentis Beria @LavrentisBeria, Petaloudomachos Patra @hatz_patty, Anachoritis PSOFOS @MakeMyDayPunk and George Lee @Harhalas.

1. User Paristzina Retweeted

Dimitris Stamatis @stamatis_dim –

TSIPRAS’ first visit to Skopje, and he called the Thessaloniki MACEDONIA airport.. MIKRA! Remember that his friend BOUTARIS (SOROS’ pal) had proposed to rename it to NIKOS GKALIS! There are no coincidences…

—–

User Paristzina Retweeted

“Blond Frau” –

I went this afternoon to change a pair of shoes, got me another one, no chance…

—-

User Paristzina Retweeted

Georgy Zhukov @GeorgyZhukov –

Tsipras talked about “civil aviation” and (proudly) called the Macedonia Airport “Airport of Mikra”, its name before 1992, when it was renamed to “Macedonia”, as a reaction to the nationalist garbage thrown to our face by the newly-founded back then so-called state of Skopje.

——-

2. Adonis Georgiadis –

Life is a bitch… @KostasVaxevanis Today I feel for you, buddy. Alexis will make you eat your tongue…

——-

3. User Paristzina Retweeted

George Lee @Harhalas –

It was just and it became true!

Pappas’ program “Greece on the Moon” succeeded!

See the landing on the Moon!

——–

4. Petaloudomachos Patra – 28 Mar

Dragasakis thinks that he talks to cretins. They do whatever they like, destroying and selling off a whole Country. They don’t give a damn about the citizens, but they are sure that you will vote for them again. They think you are THAT stupid! So are you or not? —

Meaculpa.gr

Dragasakis: We make unforgivable mistakes but people will vote for us again.

A special mention is in order for the regular reproduction of posts by Nikos Sotiropoulos, a well-known pro-New Democracy account owned by a partner of the Patras Commercial Association, a publicist specializing in social media. Our investigation has shown that Sotiropoulos is very active on Twitter: a major influencer who usually posts against SYRIZA and also has a wide network of interconnected accounts.

User Paristzina Retweeted

Nikos Sotiropoulos @NIKSOTIROPOULOS –

Petsitis also stole 60,000 euros from FIFA. Jerome Walke received in 2005 a money order of 500,000 euros by the Greek company of broadcasting rights and sports marketing, which amounted to a fee of 60,250 euros to Manolis Petsitis after taxes.

One of these interconnected accounts, Yannis Yannopoulos @giannopoulos35, also from Patras, has a profile photo of himself with New Democracy MP Adonis Georgiadis, along with the pinned tweet:

Dread and terror for Tsipras & Kammenos’ pets, the Vice-President of our hearts, @AdonisGeorgiadi.

This is why we love Adonis!

That “Paris Tzina” is in fact affiliated with New Democracy is further confirmed by its activity during DETH: this account, averaging 415 actions per day, tweeted about DETH a total of 283 times during our reference period, mostly retweeting content against Tsipras and SYRIZA while tirelessly wishing the world good morning and goodnight…

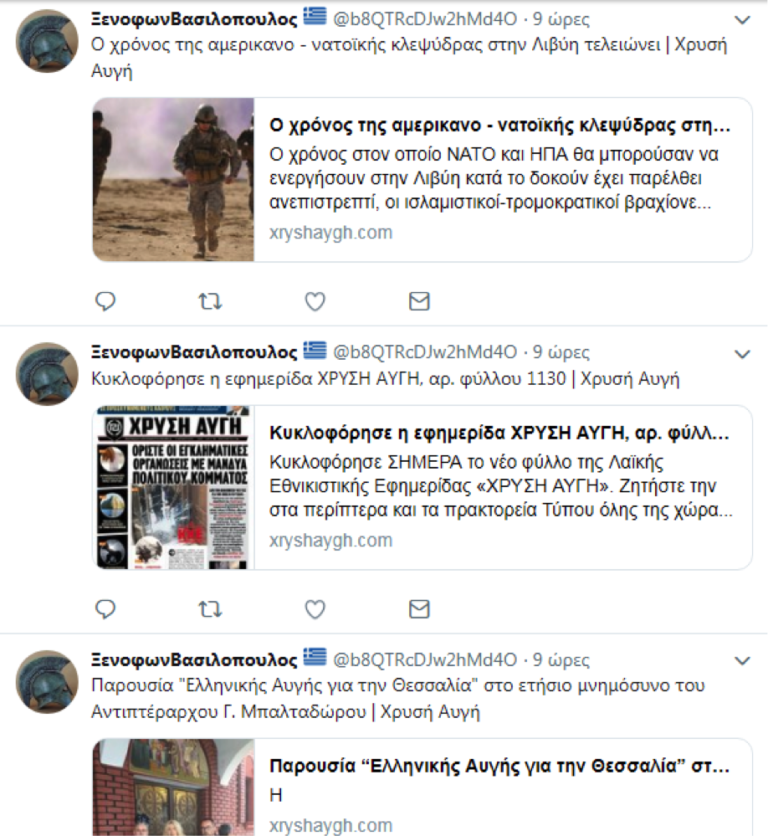

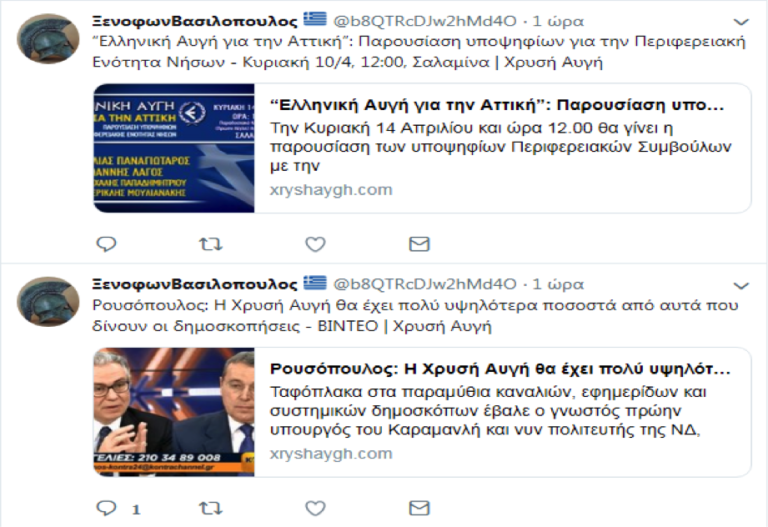

Xenophon Vasilopoulos

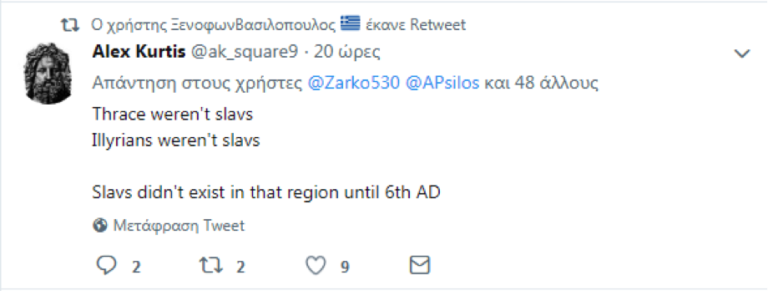

A Bot in the service of Golden Dawn

While examining the accounts with the most actions per day during DETH, we encountered an account named “Xenophon Vasilopoulos” with the user name b8QTRcDJw2hMd4O, an obvious product of a random alphanumeric generator. The account tweeted about 715 times per day.
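A user name like b8QTRcDJw2hMd4O stands out because it mixes upper-case letters, lower-case letters and digits with almost no repetition. The sketch below turns that intuition into a crude heuristic based on Shannon entropy. The function names, the minimum length of 12 and the entropy cut-off of 3.5 bits per character are hypothetical choices for illustration; they are not the method used in this research.

```python
import math
import re
from collections import Counter


def shannon_entropy(s: str) -> float:
    """Bits per character of the string's empirical character distribution."""
    counts = Counter(s)
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def looks_generated(handle: str, min_len: int = 12, min_entropy: float = 3.5) -> bool:
    """Hypothetical heuristic: a long handle that mixes lower case, upper case
    and digits, with high character entropy, is likely machine-generated."""
    h = handle.lstrip("@")
    if len(h) < min_len:
        return False
    mixes_classes = bool(
        re.search(r"[a-z]", h) and re.search(r"[A-Z]", h) and re.search(r"\d", h)
    )
    return mixes_classes and shannon_entropy(h) >= min_entropy


if __name__ == "__main__":
    for handle in ["@b8QTRcDJw2hMd4O", "@AngelAngelopou1", "@paristzina"]:
        print(handle, looks_generated(handle))
```

All 15 characters of b8QTRcDJw2hMd4O are distinct, so its entropy is log2(15), about 3.9 bits. @AngelAngelopou1 also mixes character classes, but its repeated letters keep its entropy near 3.1, below the cut-off. Such a heuristic can only flag candidates; many genuine users also pick odd handles.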

On its profile, one cannot help but notice a huge Greek flag with the Macedonian sun and the shadow of a sword-wielding warrior in the background; the profile picture is an ancient Greek helmet with the famous phrase Molon labe, “Come and take (them)”.

This is an automated far-right account directly connected with the official website of Golden Dawn and reproducing all the articles posted by the neo-Nazi party.

In just 17 months since its creation, the account has racked up 193,000 tweets and 130,000 likes; it follows 5,000 accounts and is followed by 1,818.
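The lifetime totals above can be converted into daily averages with simple arithmetic. The sketch assumes an average month of 365.25 / 12 days, because the exact creation date of the account is not given in the text.

```python
# Average daily activity implied by the lifetime totals quoted above.
# Assumption: an average month of 365.25 / 12 (about 30.44) days, since the
# account's exact creation date is not given.
DAYS_PER_MONTH = 365.25 / 12
MONTHS_ACTIVE = 17
TOTAL_TWEETS = 193_000
TOTAL_LIKES = 130_000

days_active = MONTHS_ACTIVE * DAYS_PER_MONTH
tweets_per_day = TOTAL_TWEETS / days_active
likes_per_day = TOTAL_LIKES / days_active

print(f"{days_active:.0f} days, {tweets_per_day:.0f} tweets/day, {likes_per_day:.0f} likes/day")
```

Over roughly 517 days this works out to about 370 tweets and 250 likes per day, every day. These are lifetime averages and need not match the daily rate measured for a specific period.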


The account in question regularly reproduces the content of the personal accounts of Golden Dawn leader Nikos Michaloliakos and Golden Dawn MPs such as E. Zaroulia, I. Kasidiaris, Ch. Pappas, etc.

1. Xenophon Vasilopoulos @b8QTRcDJw2hMd4O –

The time for the Americans and NATO in Libya is up – Golden Dawn

The time when NATO and the USA could act in Libya as they wished has passed, Islamic-terrorist groups…

xryshaygh.com

——-

Xenophon Vasilopoulos @b8QTRcDJw2hMd4O –

Golden Dawn newspaper is out, issue 1130 – Golden Dawn

The new issue of the popular-nationalist newspaper Golden Dawn is out. Find it at news stands throughout the country…

xryshaygh.com

——-

Xenophon Vasilopoulos @b8QTRcDJw2hMd4O –

“Hellenic Dawn” was present in Thessaly, for the annual memorial for Air Marshal G. Baltadoros – Golden Dawn.

xryshaygh.com

—————–

2. Xenophon Vasilopoulos @b8QTRcDJw2hMd4O –

“Hellenic Dawn” for Attica: Presentation of the candidates for the Regional Unit of the Islands – Sunday, 10 April 2019, 12:00, Salamina – Golden Dawn

xryshaygh.com

——

Xenophon Vasilopoulos @b8QTRcDJw2hMd4O

Roussopoulos: Golden Dawn will score much higher than what polls have them | VIDEO – Golden Dawn

xryshaygh.com

———–

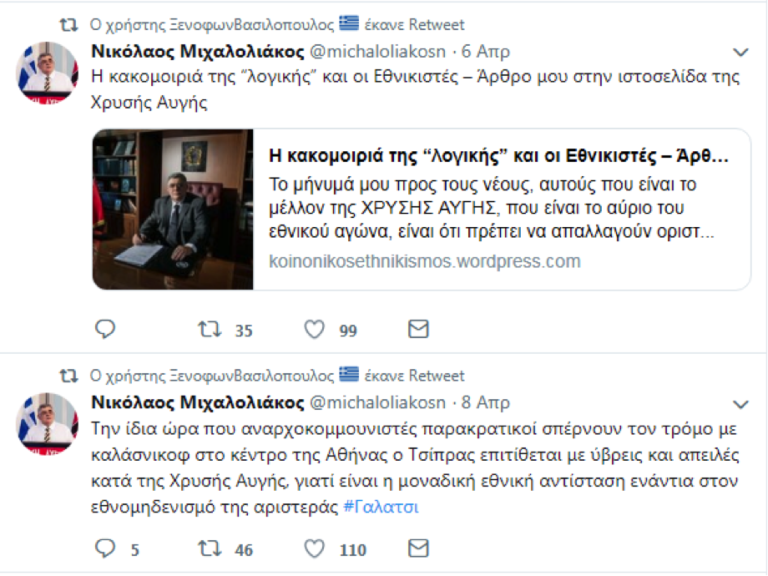

3. User Xenophon Vasilopoulos Retweeted

Nikolaos Michaloliakos @michaloliakosn –

The poverty of “common sense” and the Nationalists – Article of mine on Golden Dawn website

koinonikosethnikismos.wordpress.com

——–

User Xenophon Vasilopoulos Retweeted

Nikolaos Michaloliakos @michaloliakosn –

While anarcho-communist paramilitary groups terrorize with AK-47s the center of Athens, Tsipras turns against Golden Dawn with insults and threats, because we are the only national resistance to left-wing national nihilism #Galatsi

————

4. User Xenophon Vasilopoulos Retweeted

Ilias Kasidiaris @IliasKasidiaris –

Extrajudicial notice served on @NChatzinikolaou and @Real.gr: They ban us because they fear the power of Golden Dawn in Athens!

xryshaygh.com

User “Xenophon Vasilopoulos” also reproduces content from other nationalist websites such as ethnikismos.net, as well as from similar accounts (in Greek and English) that feud, through series of “historical” posts, with “enemy” nationalist accounts from North Macedonia, Albania and other Balkan countries. Such accounts include Elisabet Paschos @Makedni, Alex Kurtis @ak_square9 (regularly retweeted by “Xenophon Vasilopoulos”), ALE_X_ANDROS @ptolemy_Lagu, Spartiatis @KSpartiatis, and Aitolos @AITWLOS.

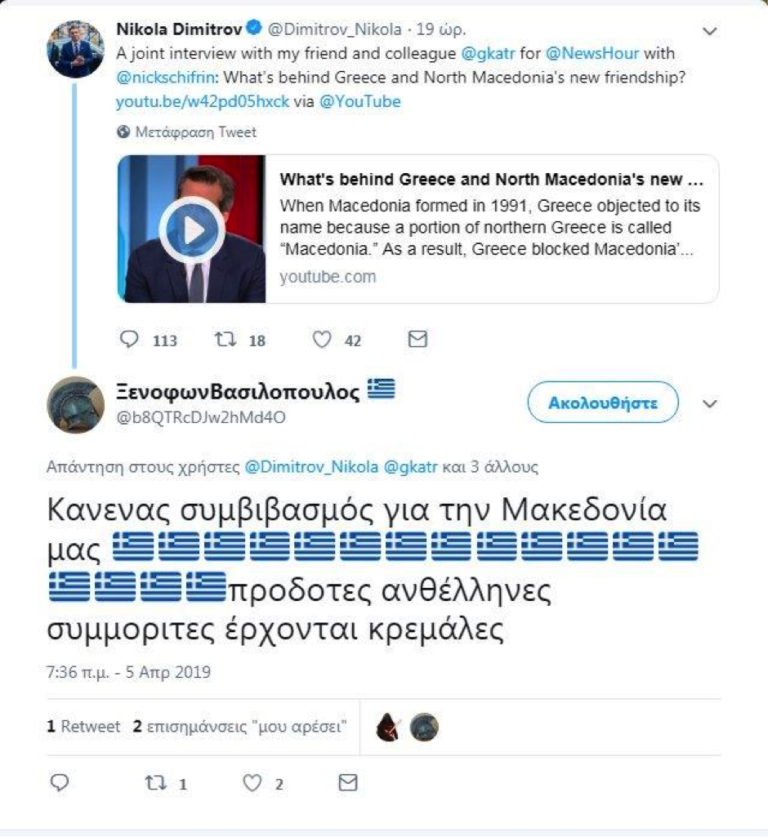

It is noteworthy that, on the few occasions when the account posts original comments, all of them nationalistic, it also retweets its own tweets. There are also several attacks against politicians, peaking during Tsipras’ visit to North Macedonia in early April. During those days, the account went wild, posting insults and threats against the Foreign Ministers of North Macedonia and Greece, Nikola Dimitrov and Georgios Katrougalos, after their joint press conference.
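Retweeting one's own tweets is easy to quantify once each retweet records the author of the original post. The sketch below computes the share of an account's retweets that point back to its own tweets. The `Post` record and its field names are hypothetical stand-ins for whatever structure the collected data actually has.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Post:
    author: str                              # handle of the account that posted
    retweet_of_author: Optional[str] = None  # original author if a retweet, else None


def self_retweet_share(posts: list[Post], handle: str) -> float:
    """Fraction of an account's retweets that point back to its own tweets."""
    retweets = [p for p in posts if p.author == handle and p.retweet_of_author is not None]
    if not retweets:
        return 0.0
    own = sum(1 for p in retweets if p.retweet_of_author == handle)
    return own / len(retweets)


if __name__ == "__main__":
    # Hypothetical sample: three retweets by "bot", two of them of its own tweets.
    sample = [Post("bot", "bot"), Post("bot", "other"), Post("bot", "bot"), Post("bot")]
    print(self_retweet_share(sample, "bot"))
```

Original tweets (where `retweet_of_author` is None) are excluded from the denominator, so the ratio describes retweeting behavior only.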

1. User Xenophon Vasilopoulos Retweeted

Alex Kurtis @ak_square9 –

Reply to users @Zarko530 @APsilos and 48 more

——-

2. Xenophon Vasilopoulos @b8QTRcDJw2hMd4O

Reply to users @Dimitrov_Nikola, @gkatr and 3 more –

No compromise about our Macedonia

Traitors anti-Greeks bandits, we will hang you all

The following image shows in white the cluster of Greek far-right accounts. Amidst them all, circled in red, one may see the Golden Dawn bot, “Xenophon”, next to Ilias Kasidiaris’ and Christos Pappas’ accounts.

MODELS, POLITICS AND BOTS

THE LOW-PROFILE HUNK WHO VOTES RESPECTFULLY FOR SYRIZA AND… EIRINI KAZARIAN

What could possibly be the affinity between Minister of Digital Policy Nikos Pappas and “Greece’s Next Top Model” winner Eirini Kazarian? Or between Vaggelis Meimarakis, the leading candidate of New Democracy for the European Parliament, and Yiorgos Danos, the winner of the “Greek Survivor” show? Probably nothing, one would be tempted to answer. In the magical world of Twitter, however, everybody comes together.

During our research into the 3,698 accounts that tweeted about DETH, we observed that many users tweeted persistently about TV reality shows, quiz shows, and lifestyle shows (GNTM, Survivor, Masterchef, My Style Rocks, Your Face Sounds Familiar, Shopping Star, etc.). However, amidst posts by such users, we also detected the regular, unedited reproduction of political discourse, mostly by MPs and members of the two largest Greek parties, as well as by accounts known to support them on Twitter. On that basis, we set out to answer the following question: which of the 20,000 accounts that tweeted about #Deth2018 also commented on the final of GNTM in December 2018?

After collecting and cross-referencing the relevant data, we saw that, out of a total of 8,200 accounts that tweeted about the GNTM final, 1,838 users had also tweeted about DETH from 7 to 17 September 2018. A benevolent observer might conclude that this behavior is more or less normal on social media. But is this actually true?
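The cross-referencing described above is, at its core, a set intersection between the accounts active on two topics. The sketch below shows the idea with a few hypothetical handles standing in for the collected datasets; the function name is also hypothetical. Handles are lower-cased before comparison because Twitter user names are not case-sensitive.

```python
def cross_topic_overlap(accounts_a: set[str], accounts_b: set[str]) -> tuple[set[str], float]:
    """Accounts active in both topics, and their share of topic B's accounts."""
    a = {h.lower() for h in accounts_a}
    b = {h.lower() for h in accounts_b}
    both = a & b
    share = len(both) / len(b) if b else 0.0
    return both, share


# Hypothetical handles standing in for the #Deth2018 and GNTM-final datasets.
deth_accounts = {"@User_A", "@user_b", "@user_c", "@user_d"}
gntm_accounts = {"@user_c", "@USER_D", "@user_e"}

both, share = cross_topic_overlap(deth_accounts, gntm_accounts)
print(sorted(both), f"{share:.1%}")
```

With the counts reported above, the 1,838 overlapping users amount to about 22.4 percent of the 8,200 accounts that tweeted about the GNTM final. The overlap only produces a shortlist; the qualitative reading of each account's posts is what separates ordinary users from automated ones.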

In fact, amidst these 1,838 accounts, we detected some very interesting cases. After a quantitative and qualitative analysis of their posts, we found that these cases showed automated behavior, transmitting two seemingly unrelated messages: the promotion of TV shows and the promotion of the political discourse of the two parties.

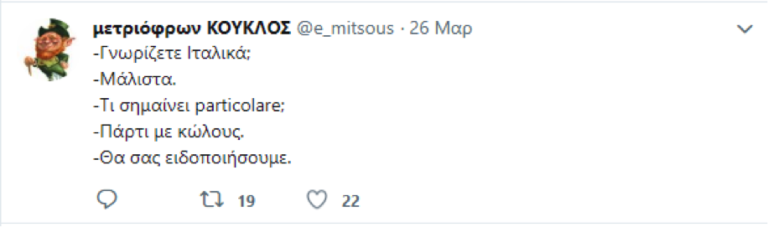

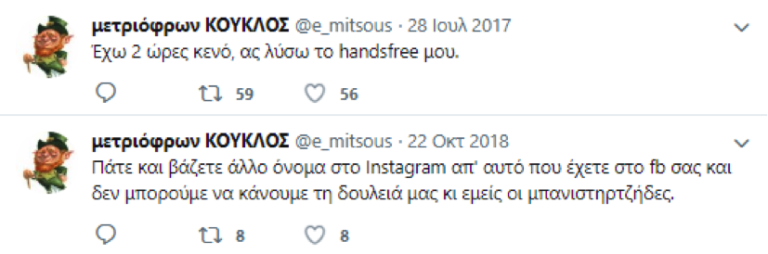

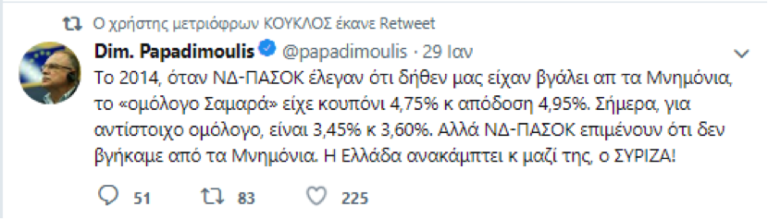

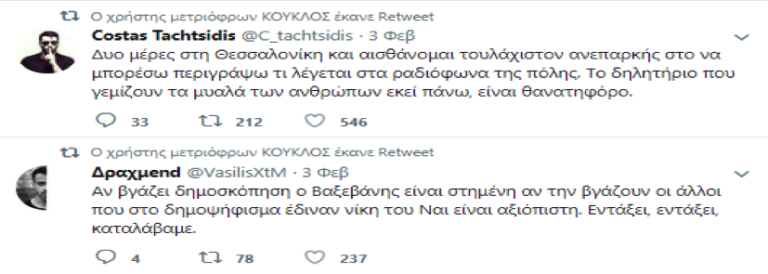

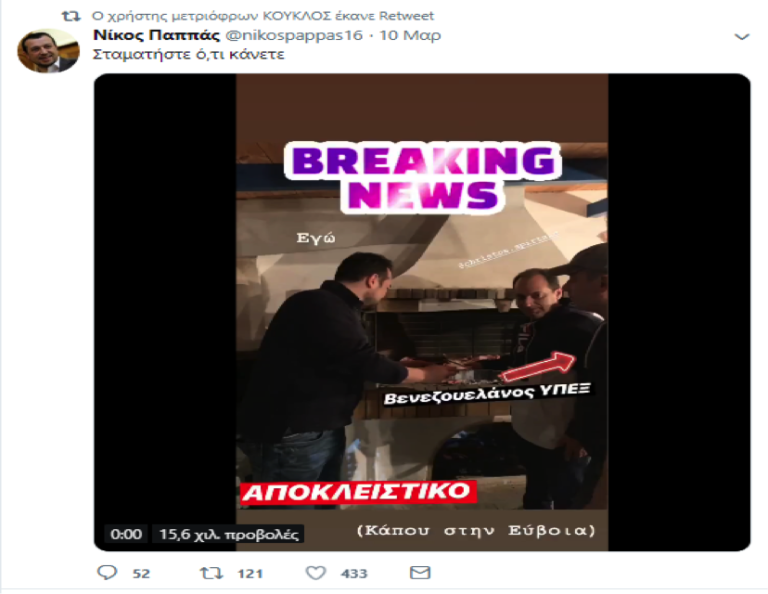

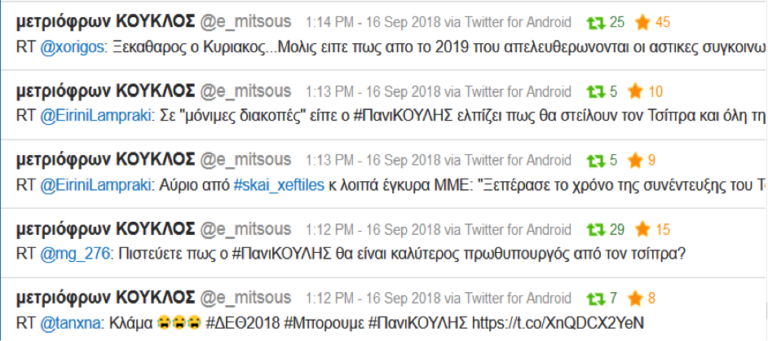

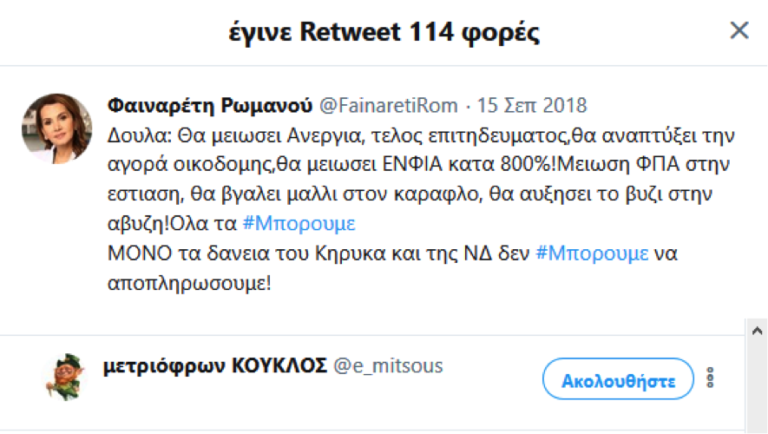

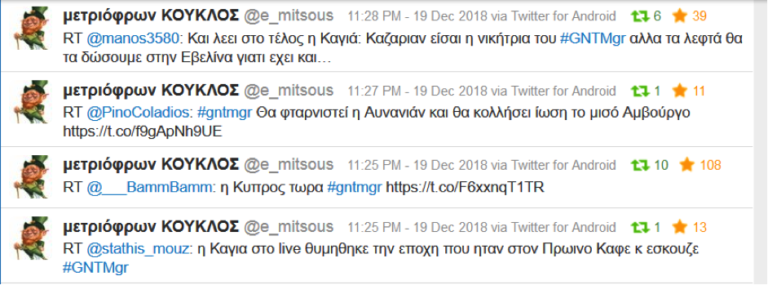

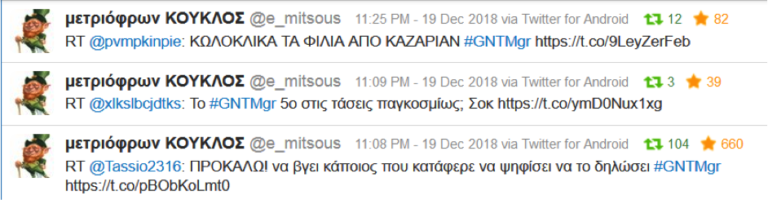

A quite telling case is the account with the peculiar name “Modest Hunk” [μετριόφρων ΚΟΥΚΛΟΣ] @e_mitsous. This user has an Irish troll as its profile picture, and although its quantitative features (20 tweets per day) do not “scream” automation, a careful analysis of its content proves otherwise. To begin with, one sees at first glance that the vast majority of its posts are content reproduced from other accounts, while its rare original tweets are generic one-liners that do not depend on any particular context. Moreover, whenever it retweets a post, it also “likes” that exact same post at the exact same time.
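The retweet-plus-like pattern can be measured as the share of an account's retweets that are accompanied by a like on the same post within a small time window. The sketch below assumes, hypothetically, that the collected data records a timestamp for every retweet and every like as `(action, tweet_id, timestamp)` tuples; whether such like timestamps are available depends on how the data was gathered. The one-second tolerance is also an illustrative assumption.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def retweet_like_pairing(events, tolerance=timedelta(seconds=1)):
    """Fraction of an account's retweets that have a like on the same tweet
    within `tolerance`.

    events: iterable of (action, tweet_id, timestamp) for one account,
            where action is "retweet" or "like" (hypothetical data format).
    """
    likes = defaultdict(list)
    retweets = []
    for action, tweet_id, ts in events:
        if action == "like":
            likes[tweet_id].append(ts)
        elif action == "retweet":
            retweets.append((tweet_id, ts))
    if not retweets:
        return 0.0
    paired = sum(
        1
        for tweet_id, ts in retweets
        if any(abs(ts - like_ts) <= tolerance for like_ts in likes.get(tweet_id, []))
    )
    return paired / len(retweets)


if __name__ == "__main__":
    t0 = datetime(2019, 4, 1, 12, 0, 0)
    sample = [
        ("retweet", "1", t0), ("like", "1", t0),                       # simultaneous
        ("retweet", "2", t0 + timedelta(minutes=5)),
        ("like", "2", t0 + timedelta(minutes=9)),                      # four minutes apart
    ]
    print(retweet_like_pairing(sample))
```

A human occasionally likes and retweets the same post; a ratio close to 1.0 sustained across hundreds of retweets is what points to scripted behavior.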

1. “Modest Hunk” @e_mitsous –

– Do you speak Italian?

– Yes.

– What does particolare mean?

– A party with colous [asses].

– We will call you.

———-

2. User “Modest Hunk” Retweeted

“Modest Hunk” @e_mitsous –

– Yes please?

– A decuple espresso freddo, black.

—–

User “Modest Hunk” Retweeted

“Modest Hunk” @e_mitsous –

– How many months pregnant are you?

– Oh, I am not pregnant.

————–

3. “Modest Hunk” @e_mitsous –

I have a 2-hour break, I might as well untangle my hands-free.

——

“Modest Hunk” @e_mitsous –

You keep using different names on Instagram and on Facebook, so how do you expect us Peeping Toms to do our job?

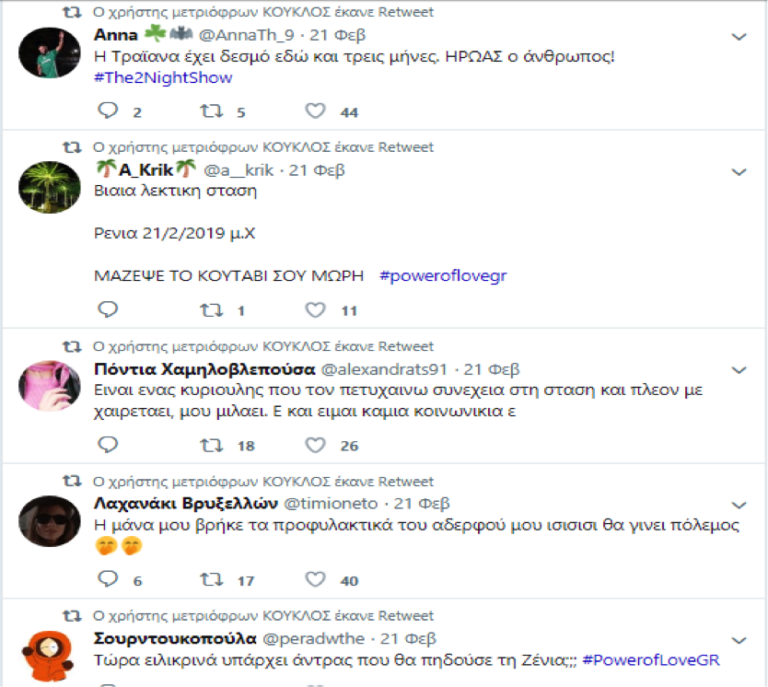

In addition, the user retweets accounts with content relevant to almost all the reality and quiz shows on Greek TV.

1. User “Modest Hunk” Retweeted

Anna @AnnaTh_9 –

Traïana has been having an affair for three months now. The guy is a HERO!

—-

User “Modest Hunk” Retweeted

A_Krik @a_krik – A verbally abusive stance

Renia, 21/2/2019 AD

KEEP YOUR PUPPY NEAR YOU BITCH #poweroflovegr

—-

User “Modest Hunk” Retweeted

“Always Shy” @alexandrats91 –

There is a gentleman that I keep bumping into at the bus stop, and he has even started to talk to me. Am I a socialite or what?

—-

User “Modest Hunk” Retweeted

“Brussels Sprout” @timioneto –

My mom found my brother’s condoms war is coming

—-

User “Modest Hunk” Retweeted

Sourntoukopoula @peradwthe –

I mean, really, is there a man who would screw Xenia??? #PowerofLoveGR

———————–

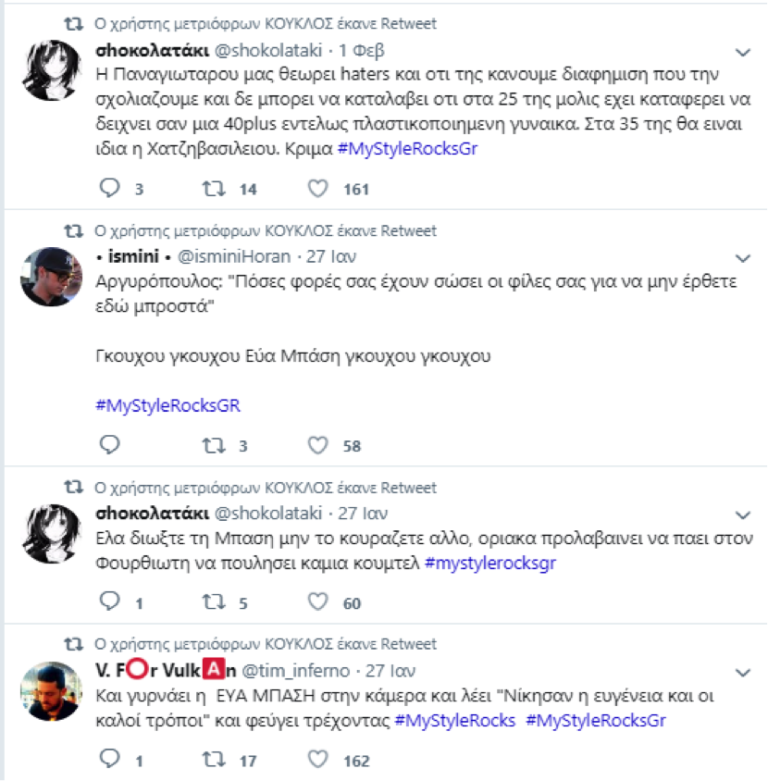

2. User “Modest Hunk” Retweeted

“Chocolate” @shokolataki –

Panagiotarou thinks that we are haters and that we promote her when we talk about her, but she can’t realize that she is 25 and has the looks of a 40plus, totally plasticized woman. At 35, she will look like Chatzivassiliou. What a pity @MyStyleRocksGR

—–

User “Modest Hunk” Retweeted

ismini @isminiHoran –

Argyropoulos: “How many times have your friends saved you by not letting you come up here?”

[cough cough] Eva Bassi [cough cough]

#MyStyleRocksGR

—–